Ever watched a foreign film where the actors' mouths are moving completely out of sync with the dubbed dialogue? It's distracting, right? That jarring mismatch is precisely the problem AI lip sync technology solves.

Think of it as digital puppetry, but for a person's mouth. The AI listens to a new audio track—say, a Spanish dub for an English video—and then meticulously alters the speaker's lip movements in the video to match the new words perfectly. The goal is to make it look like the person was speaking that language all along.

What Is AI Lip Sync and How Does It Work?

At its core, AI lip sync is about creating a believable and seamless viewing experience. It tackles that age-old issue of out-of-sync audio that can pull an audience right out of the moment, making dubbed content feel cheap or unnatural.

The magic happens through some pretty sophisticated deep learning models. These algorithms analyze an audio file, break it down into individual sounds, and then generate the corresponding mouth shapes. They then map these new movements onto the speaker's face in the video, creating a fluid, natural-looking result.

The Core Mechanics

This isn't just a simple animation trick. It's a complex process that relies on a few key steps to get right. Advancements in neural networks have been the real game-changer here, taking us from clunky, robotic-looking mouths to the hyperrealistic results we see today. The foundational techniques are similar to those used for animating pictures with AI, but with a focus on dynamic speech.

Here’s a simplified look at how it typically works:

- Audio Analysis: First, the AI breaks down the new audio track into its basic phonetic components, called phonemes (like the "k" sound in "cat").

- Facial Mapping: Simultaneously, it scans the original video to identify and track key facial landmarks, especially around the mouth, jaw, and cheeks.

- Movement Generation: This is where the AI does its heavy lifting. It predicts and generates the precise mouth shapes and movements needed to form the sounds from the new audio.

- Video Synthesis: Finally, it seamlessly blends these newly generated mouth movements back into the original video footage, making sure the lighting and head position remain consistent.
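To make the hand-off between audio analysis and the video side a bit more concrete, here is a minimal sketch of one small piece: working out which phoneme is "active" on each video frame. It assumes the audio step has already produced phonemes with start and end times. The `PhonemeSpan` class, the `phoneme_per_frame` helper, and the timings are all made up for illustration and are not taken from any particular tool.

```python
from dataclasses import dataclass


@dataclass
class PhonemeSpan:
    phoneme: str
    start: float  # seconds
    end: float    # seconds


def phoneme_per_frame(spans, fps, n_frames, silence="sil"):
    """Return the phoneme active at the midpoint of each video frame."""
    labels = []
    for i in range(n_frames):
        t = (i + 0.5) / fps  # timestamp at the middle of frame i
        label = silence      # default when no phoneme covers this time
        for span in spans:
            if span.start <= t < span.end:
                label = span.phoneme
                break
        labels.append(label)
    return labels


# Hypothetical timings for the word "cat" in a 25 fps video.
spans = [
    PhonemeSpan("k", 0.00, 0.08),
    PhonemeSpan("ae", 0.08, 0.24),
    PhonemeSpan("t", 0.24, 0.32),
]
frames = phoneme_per_frame(spans, fps=25, n_frames=10)
print(frames)
```

Real systems work with much richer audio features than a single label per frame, but the basic idea of lining audio time up with frame time is the same.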

Pushing the Boundaries of Realism

The technology has come a long way in a very short time. Early versions were okay, but modern systems are astonishingly realistic.

A major leap forward has been the use of multi-encoder frameworks. Instead of trying to process everything at once, these systems handle the audio, the person's appearance, and their facial structure as separate streams of data. A final "decoder" then brings it all together to create the final, synthesized lip movements. This method produces much more accurate and nuanced results.
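If it helps to picture the "separate streams, one decoder" idea, here is a toy sketch. It is only a shape-level illustration: the random linear layers stand in for trained networks, and the feature sizes, names (`audio_encoder`, `identity_encoder`, `structure_encoder`, `predict_mouth`), and inputs are assumptions made up for this example.

```python
import random


def make_linear(in_dim, out_dim, rng):
    """Build a random linear layer as a function (a stand-in for a trained network)."""
    weights = [[rng.uniform(-1, 1) for _ in range(in_dim)] for _ in range(out_dim)]

    def layer(x):
        return [sum(w * v for w, v in zip(row, x)) for row in weights]

    return layer


rng = random.Random(0)
audio_encoder = make_linear(4, 3, rng)      # audio features for one frame
identity_encoder = make_linear(6, 3, rng)   # the speaker's appearance
structure_encoder = make_linear(5, 3, rng)  # facial landmarks
decoder = make_linear(9, 2, rng)            # fused features -> mouth parameters


def predict_mouth(audio, appearance, landmarks):
    """Encode each stream separately, concatenate, then decode."""
    fused = (
        audio_encoder(audio)
        + identity_encoder(appearance)
        + structure_encoder(landmarks)
    )
    return decoder(fused)


mouth = predict_mouth([0.1] * 4, [0.5] * 6, [0.2] * 5)
print(len(mouth))
```

The point of the design is that each encoder can specialize in its own kind of input before the decoder has to combine them.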

Thanks to this and better training data, today's tools can even handle videos with multiple people, automatically detecting who is speaking at any given moment. This opens the door to much more than just simple, single-speaker videos.
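How might a tool guess who is speaking? One simple heuristic, shown here as a rough sketch and not as how any specific product does it, is to check whose mouth movement best tracks the loudness of the audio. The per-frame numbers and the `pick_active_speaker` helper below are invented for illustration.

```python
def pearson(xs, ys):
    """Pearson correlation of two equal-length sequences (0.0 if either is constant)."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    if vx == 0 or vy == 0:
        return 0.0
    return cov / (vx * vy) ** 0.5


def pick_active_speaker(audio_energy, mouth_openness_by_face):
    """Score each face by how well its mouth opening follows the audio energy."""
    scores = {
        face: pearson(audio_energy, series)
        for face, series in mouth_openness_by_face.items()
    }
    return max(scores, key=scores.get), scores


# Hypothetical per-frame measurements for two faces in the same shot.
audio_energy = [0.1, 0.8, 0.9, 0.2, 0.7, 0.1]
faces = {
    "face_a": [0.0, 0.6, 0.7, 0.1, 0.5, 0.0],  # moves with the audio
    "face_b": [0.3, 0.3, 0.2, 0.3, 0.3, 0.3],  # mostly still
}
speaker, scores = pick_active_speaker(audio_energy, faces)
print(speaker)
```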

The Technology Behind Perfect AI Lip Sync

Think of AI lip sync technology as a master digital puppeteer. It doesn't just move a character's mouth; it meticulously re-animates it, frame by painstaking frame, to perfectly match a new audio track. This whole process is a beautifully complex dance between analyzing sound and generating visuals.

It all begins with the audio. The AI doesn’t just hear words; it breaks down the soundwave into its most basic ingredients. These are the distinct units of sound that make up speech, known as phonemes. Every language is built from a unique set of these building blocks—like the 'k' sound in 'cat' or the 'ch' in 'chair'.

Once the AI has a complete blueprint of the sounds, its next job is to translate that into what we see.

From Sounds to Shapes: The Phoneme-Viseme Connection

For every sound (phoneme), there’s a corresponding mouth shape, which we call a viseme. It's a many-to-one relationship: several phonemes can share one viseme. For instance, the sounds /p/, /b/, and /m/ all look the same because you have to press your lips together to make them, so they all share a single viseme.
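The shared-viseme idea can be sketched as a simple lookup table in which several phonemes point to the same mouth shape. The viseme names and the small phoneme set here are hypothetical; real systems use larger inventories and usually learn this relationship from data instead of hard-coding it.

```python
# Hypothetical viseme labels; several phonemes map to the same mouth shape.
PHONEME_TO_VISEME = {
    "p": "lips_closed", "b": "lips_closed", "m": "lips_closed",
    "f": "lip_to_teeth", "v": "lip_to_teeth",
    "k": "back_open", "g": "back_open",
    "t": "tongue_ridge", "d": "tongue_ridge",
    "ae": "wide_open",
}


def to_visemes(phonemes, default="neutral"):
    """Map a phoneme sequence to viseme labels, falling back to a neutral mouth."""
    return [PHONEME_TO_VISEME.get(p, default) for p in phonemes]


bat = to_visemes(["b", "ae", "t"])
mat = to_visemes(["m", "ae", "t"])
print(bat)
print(bat == mat)
```

Because "bat" and "mat" start with sounds that share a viseme, they produce the same sequence of mouth shapes, which is exactly why lip reading alone cannot tell them apart.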

The AI's core task is to create a flawless map, connecting every single phoneme it detected in the new audio to the correct viseme. This is where the magic, and the machine learning, really kicks in. The model has to learn the subtle, fluid transitions between these mouth shapes to avoid that jarring, robotic look we've all seen before. This skill is honed by training the AI on massive libraries of video and audio, letting it absorb the tiny nuances of how real people talk.
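To see why transitions matter, consider a hand-written stand-in for what the model learns: blending smoothly between mouth shapes instead of snapping from one to the next. This sketch uses plain linear interpolation over a single made-up "openness" value; a trained model handles this implicitly and with far more nuance, so treat the `interpolate_keyframes` helper purely as an illustration.

```python
def interpolate_keyframes(keyframes, n_frames):
    """Linearly interpolate mouth openness between (frame_index, openness) keyframes.

    keyframes must be sorted by frame index.
    """
    values = []
    for frame in range(n_frames):
        if frame <= keyframes[0][0]:
            values.append(keyframes[0][1])   # hold the first shape
            continue
        if frame >= keyframes[-1][0]:
            values.append(keyframes[-1][1])  # hold the last shape
            continue
        for (f0, v0), (f1, v1) in zip(keyframes, keyframes[1:]):
            if f0 <= frame <= f1:
                t = (frame - f0) / (f1 - f0)  # position between the two keyframes
                values.append(v0 + t * (v1 - v0))
                break
    return values


# Closed -> fully open -> closed over nine frames.
keyframes = [(0, 0.0), (4, 1.0), (8, 0.0)]
curve = interpolate_keyframes(keyframes, 9)
print(curve)
```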

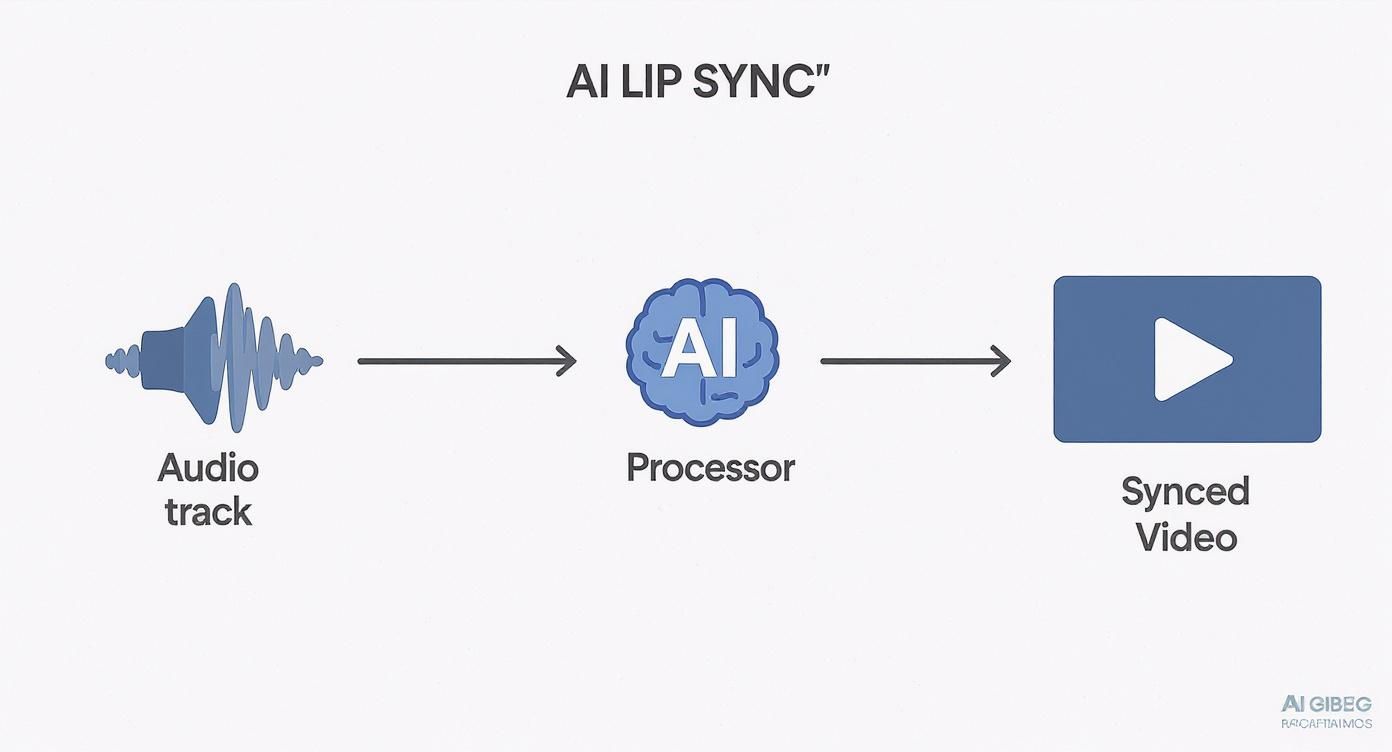

The basic workflow looks something like this:

This diagram gives you a bird's-eye view of how raw audio is processed by an AI engine to create a video where the speech and mouth movements are perfectly in sync. This is the heart of any modern lip-sync system.

Generating Lifelike Movements

With the sound-to-shape map ready, the final act is bringing the video to life. This is often handled by sophisticated models like Generative Adversarial Networks (GANs). A GAN is actually two neural networks trained together, each working against the other.

- The Generator: Its job is to create the new mouth movements for the video.

- The Discriminator: This one acts as the critic, constantly comparing the Generator's work against real-world video to find any flaws.

This back-and-forth, this constant cycle of creation and critique, is what pushes the Generator to get better and better. It keeps refining its output until the lip movements are so natural they're virtually indistinguishable from a real person speaking.
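The tug-of-war between the two networks comes down to two loss functions. Here is a minimal sketch of the standard GAN objective (in its common non-saturating form), assuming the Discriminator outputs a probability that a frame is real. The probabilities passed in are invented, and no actual networks are trained here.

```python
import math


def bce(prediction, target):
    """Binary cross-entropy for a single probability."""
    eps = 1e-12
    p = min(max(prediction, eps), 1 - eps)  # clamp to avoid log(0)
    return -(target * math.log(p) + (1 - target) * math.log(1 - p))


def discriminator_loss(d_real, d_fake):
    """The critic wants real frames scored near 1 and generated frames near 0."""
    return bce(d_real, 1.0) + bce(d_fake, 0.0)


def generator_loss(d_fake):
    """The generator wants the critic to score its frames near 1."""
    return bce(d_fake, 1.0)


# Early in training the critic easily spots fakes; later it is fooled more often.
early = generator_loss(0.1)
later = generator_loss(0.9)
print(early > later)
```

As the Generator improves, its loss falls while the Discriminator's job gets harder, and that pressure in both directions is what drives the realism up.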

In industries like animation, this has been a complete game-changer. AI now automates the incredibly tedious job of manually matching dialogue to a character's mouth. According to a recent analysis of AI's role in animation workflows, this technology is slashing production time while delivering incredible accuracy. The result isn't just about getting the timing right; it’s about capturing the feeling behind the words. If you're curious about that, you can explore text-to-speech with emotion to enhance digital voices and see how deep this technology goes.

How AI Lip Sync Is Changing The Game Across Industries

You really start to grasp the power of AI lip sync when you see it in action across different fields. This isn't just a cool tech demo anymore; it’s a practical tool that’s fundamentally changing how we create, localize, and experience video content. Its fingerprints are everywhere, from Hollywood sets to corporate training modules.

The most obvious impact is in the world of entertainment. For as long as I can remember, dubbing foreign films meant putting up with a major compromise: the actor’s lips would never quite match the new dialogue. It was distracting. AI lip sync is finally closing that gap, giving us a seamless experience that honors the original performance while opening it up to a global audience.

But this isn't just about movies. The video game industry is also jumping on board to build more believable and immersive worlds.

A New Era for Entertainment and Media

In gaming, non-player characters (NPCs) can now deliver dialogue with facial expressions that perfectly match what they're saying, no matter the language. This small detail adds a massive layer of realism, making virtual worlds feel truly alive. It also frees up animators from the tedious, manual grind of syncing dialogue, letting them pour their energy into the creative side of bringing characters to life.

The ripple effect on global media distribution is huge. Traditionally, producing high-quality dubs for multiple languages was a slow, expensive nightmare.

AI lip sync completely changes the economics. It slashes the time and cost involved in localization, allowing a single video to be accurately dubbed and synced for dozens of languages in a fraction of the time. This is blowing the doors open to new markets for studios and streaming platforms alike, directly shaping a global localization market set to be worth $5.7 billion by 2030.

If you want to dive deeper into this process, our guide to dubbing a video for global reach breaks down exactly how these tools are demolishing language barriers.

Fueling Business and Creator Content

The applications don't stop at entertainment, not by a long shot. In the business world, AI lip sync is becoming an indispensable tool for global operations.

- Corporate Training: Imagine creating one polished training video and seamlessly localizing it for teams worldwide. Everyone gets a consistent, professional learning experience with perfect lip sync.

- Marketing Campaigns: Brands can now generate personalized video ads where the speaker addresses customers by name, with lip movements that match the custom audio perfectly. That level of personalization makes marketing feel more human and seriously boosts engagement.

- Customer Support: When an on-screen presenter in a tutorial has natural, synced speech, the information just feels more trustworthy and effective.

And for individual creators on platforms like YouTube and TikTok, AI lip sync is a genuine game-changer. It lets them fix audio flubs in post-production without reshooting. Even better, it gives them the power to create multilingual versions of their content, massively expanding their audience with very little extra work.

Comparing the Best AI Lip Sync Tools

The world of AI lip sync is exploding, and with it comes a flood of new tools. It's a great problem to have, but picking the right one can feel a bit overwhelming. The best tool for you really boils down to what you're trying to accomplish—whether you're a YouTuber looking to expand your audience, a professional animator working on a feature film, or a developer building something from the ground up.

Let's break down the options to help you find the perfect fit.

https://www.youtube.com/embed/OGdJjrxiswc

Some tools are all about simplicity and speed, offering slick web interfaces where you can get the job done in minutes. Others are built for power users, providing robust APIs that plug directly into complex production pipelines. It’s all about matching the tool's horsepower to your project's demands.

Think of it this way: a social media manager needs a fast, user-friendly way to dub a short video for Instagram. A video game studio, on the other hand, needs a high-precision engine to sync dialogue for hundreds of unique characters. Both are valid needs, but they require very different solutions.

Platforms for Content Creators

For most people, ease of use is everything. The tools in this category are designed to get you from A to B with as little friction as possible. You just upload your video, drop in an audio file, and let the AI work its magic.

Many of these platforms bundle AI lip sync with other useful features, like AI voice generation and automatic translation, making them a one-stop shop for localizing content. If you're curious about how that voice side of things works, you can dive deeper in our guide to AI voice actors and synthetic voices.

These tools are a game-changer for:

- Social Media Managers who need to churn out multilingual video content fast.

- E-Learning Professionals adapting training modules for international teams.

- Marketers creating personalized video ads on a massive scale.

And for those who want to get a little more specialized, platforms like Lipdub AI focus specifically on providing top-tier lip-synchronization for a really polished final product.

Feature Comparison of Popular AI Lip Sync Platforms

To give you a clearer picture, let's compare some of the leading AI lip sync tools side-by-side. This table breaks down their key features, ideal users, and pricing models, helping you pinpoint the best option for your specific needs—from quick social media clips to professional-grade animations.

Choosing the right platform often comes down to balancing simplicity with control. While a tool like HeyGen offers incredible ease of use for creators, a framework like Wav2Lip gives developers the raw power to build something entirely custom.

Advanced Tools for Professionals and Developers

Once you move past the all-in-one platforms, you'll find a different class of tools built for the pros in animation, film, and software development. These solutions offer far more granular control over the final result and are designed to integrate seamlessly into professional workflows.

The core trade-off here is complexity for power. A one-click platform is fantastic for speed, but developer-focused APIs give you the ability to fine-tune facial expressions, match emotional tones, and make frame-by-frame adjustments.

Take a look at HeyGen’s website, for instance. It’s immediately clear that their focus is on making AI avatars and video localization accessible to everyone. The entire experience is designed to be straightforward, which is perfect for creators and businesses who need polished results without a steep learning curve. This user-first approach makes it a fantastic choice for scaling video production efficiently.

What's Next for AI Lip Sync Technology?

The momentum behind AI lip sync is impossible to ignore. It’s breaking out of specialized animation studios and becoming a go-to tool in mainstream content creation. This isn’t some passing fad; we're talking about a market expanding at a blistering pace, all thanks to our endless appetite for globalized, engaging media.

Everywhere you look, the demand is growing. Streaming services need to localize content for audiences worldwide, and game developers are on a constant quest to build more believable virtual worlds. This universal need for high-quality, affordable synchronization is what’s pushing the tech forward so quickly.

The numbers tell a pretty clear story. In 2024, the U.S. lip-sync market was valued at around $0.39 billion. But hold on, because it’s projected to rocket to $1.65 billion by 2034, which is a compound annual growth rate of 15.5%. North America is currently the biggest player, holding over 37.3% of the market share, largely because of its mature media industry and early embrace of the tech in animation and gaming. If you're interested in the nitty-gritty, you can dig into the full market analysis on lip-sync technology trends.
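If you want to sanity-check that growth rate yourself, the compound annual growth rate follows directly from the start value, the end value, and the number of years. This quick sketch uses only the figures quoted above; the `cagr` helper is just an illustrative name.

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate as a fraction (0.10 means 10% per year)."""
    return (end_value / start_value) ** (1 / years) - 1


# $0.39 billion in 2024 growing to $1.65 billion by 2034.
rate = cagr(0.39, 1.65, 2034 - 2024)
print(f"{rate:.1%}")  # prints 15.5%
```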

Emerging Innovations on the Horizon

So, where is this all heading? Well, the evolution of AI lip sync is on track to unlock some truly dynamic applications that will fundamentally change how we engage with video. The technology is making a huge leap from a tool for pre-recorded media to something that can work in the moment, transforming live events.

Keep an eye on these key developments defining the next generation of lip-sync tech:

- Real-Time Lip Sync for Live Broadcasts: Picture this: you’re watching a live international press conference. Instead of relying on a delayed translator, you simply select your language, and the speaker's lips move perfectly in sync with the new audio, all in real time. This is the kind of thing that will completely erase language barriers for global live audiences.

- Integration with AI Voice Cloning: The next logical step is to pair flawless lip sync with incredibly realistic AI-generated voices. This combination allows for the creation of fully believable digital avatars that can say anything, in any voice or language, paving the way for everything from virtual brand ambassadors to highly personalized digital assistants.

- Hyper-Personalized Video at Scale: Imagine a brand sending out thousands of video messages where an AI spokesperson addresses each customer by name, with perfectly synchronized speech. This level of personalization makes marketing feel less like a broadcast and more like a one-on-one conversation.

The future of AI lip sync isn't just about dubbing movies better. It's about making video communication more personal, accessible, and immediate than ever before.

What we're seeing is a fundamental shift. AI lip sync is moving from a handy post-production tool to an interactive, real-time communication platform. As the algorithms get smarter and the hardware gets faster, the line between what's real and what's AI-generated will only get fuzzier, opening up a world of new possibilities for creators and audiences alike.

Using AI Lip Sync Responsibly

There's no denying how powerful AI lip sync is, but like any game-changing tool, it comes with some serious ethical strings attached. As this tech gets easier for everyone to use, we all need to be keenly aware of how it could be misused.

The very same tool that lets you flawlessly dub a training video into another language could also be used to create a convincing deepfake to spread misinformation. That’s why using it responsibly is everything.

At the center of this whole discussion are two simple, but critical, ideas: transparency and consent. If you're going to alter what someone looks like or sounds like, your audience has a right to know. Trying to pass off AI-modified video as the real deal just destroys trust and makes it harder for anyone to tell what’s real and what isn’t.

Just as important, you can’t just grab someone’s image or voice and start tweaking it without their direct permission. That's a huge ethical line to cross. Responsible creation starts with making absolutely sure you have the rights to use and modify the content in the first place.

Best Practices for Ethical Creation

So, how do you tap into the benefits of AI lip sync while keeping your integrity intact? It comes down to having a clear set of ethical ground rules. Following these practices doesn't just cover your bases—it helps you build a much stronger, more trusting relationship with your audience.

Here are a few practical steps to put into action:

- Label Your AI Content Clearly: Don't be shy about it. Always disclose when a video has been altered with AI. A simple watermark, a disclaimer in the video description, or even some text on the screen does the trick.

- Get Explicit Consent: This one's non-negotiable. Before you touch a person’s likeness or voice, you need their clear and informed permission to do so.

- Enhance, Don't Deceive: Stick to using AI lip sync for good. Use it to make content more accessible with dubbing, fix minor audio mistakes, or bring cool animated characters to life. Steer clear of any use that's designed to mislead people or impersonate someone.

By making transparency and consent your top priorities, you establish yourself as a creator people can trust. The whole point of this technology should be to break down barriers and unleash creativity, not to make reality a guessing game.

Ultimately, the buck stops with us—the creators, marketers, and publishers—to set the standard. By adopting these ethical practices, we can ensure AI lip sync technology remains a force for good, pushing innovation forward while respecting people and earning our audience's trust.

Common Questions About AI Lip Sync

As AI lip sync starts showing up everywhere, it's totally normal to have questions. It’s a fascinating mix of complex AI and creative video work, so let's tackle a few of the most common curiosities to give you a clearer picture of what this tech can actually do.

Just How Good Is It? Is It Noticeable?

Honestly, today's AI lip sync is shockingly good. We're at a point where the best tools, given good source material, can produce results that are very hard to tell apart from a real person talking. They work by breaking down audio into phonemes (the tiny, distinct sounds of speech) and matching the mouth movements to them frame by frame.

But, and this is a big "but," the quality of your final video really hinges on what you feed the AI. For the best, most seamless results, you need clean audio and a high-quality video. Things like a lot of background noise, mumbled words, or a speaker who keeps turning their head away from the camera can trip up the AI and make the final sync less believable.

Does It Work for Languages Besides English?

Absolutely, and that's really where this technology shines. The top AI lip sync platforms have been trained on enormous datasets packed with speech from dozens, if not hundreds, of languages. This is what allows the AI to create natural-looking lip movements for a French or Japanese audio track, even if the person on screen originally spoke English.

This multilingual capability is what makes AI lip sync a game-changer for anyone creating content for a global audience. It lets you break down language barriers and connect with people in a way that feels genuine, avoiding the awkward, disconnected feel of traditional dubbing.

Do I Need to Be a Tech Wizard to Use It?

Not in the slightest. While the technology under the hood is incredibly complex, the tools themselves are built for regular people, not engineers. Most platforms have a simple, drag-and-drop interface that anyone can master in a few minutes.

For instance, tools like Rask AI and HeyGen let you upload your video, drop in your new audio file, and let the AI handle the rest with a few clicks. This ease of use means you don't need a degree in video editing or animation to add powerful AI lip sync to your projects. It’s truly accessible to everyone.
