The Evolution of Text to Speech Emotion Technology

Remember those old robotic, monotone computer voices? Early text to speech (TTS) could convey words, but lacked the crucial human element of emotion. This often resulted in a detached and unnatural listening experience. But the field of emotional TTS has come a long way. We’ve moved beyond those robotic tones and toward AI voices capable of conveying genuine emotion.

This progress is largely thanks to significant leaps in artificial intelligence (AI) and machine learning. The adoption of deep neural networks for speech synthesis in the 2010s marked a crucial turning point. These networks enabled more complex TTS models capable of generating realistic and expressive synthetic speech, allowing systems to capture nuances of pitch, intonation, and rhythm that older synthetic voices lacked. Learn more about this evolution here: Text-to-Speech Evolution

From Monotone to Multifaceted: Milestones in Emotional TTS

The journey from robotic to relatable has involved several key milestones. Early TTS systems struggled to replicate even basic emotions. Ongoing research, however, led to breakthroughs in understanding the acoustic patterns linked to various emotional states. For example, systems began to recognize that higher pitch and faster tempo often indicate excitement, while lower pitch and slower tempo might convey sadness.
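The pitch and tempo cues described above can be sketched as a small lookup table. This is a minimal illustration, not how any particular TTS product works: the `Prosody` class, the `prosody_for` helper, and every numeric value are assumptions chosen only to show the direction of each cue.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Prosody:
    pitch_shift_pct: float  # change relative to the neutral pitch, in percent
    rate_multiplier: float  # 1.0 means the neutral speaking rate


# Hypothetical presets: only the direction of each value reflects the text
# (excitement = higher and faster, sadness = lower and slower).
EMOTION_PROSODY = {
    "neutral": Prosody(0.0, 1.0),
    "excitement": Prosody(15.0, 1.2),
    "sadness": Prosody(-10.0, 0.85),
}


def prosody_for(emotion: str) -> Prosody:
    """Return the preset for an emotion, falling back to neutral."""
    return EMOTION_PROSODY.get(emotion.lower(), EMOTION_PROSODY["neutral"])
```

A real system would learn these relationships from data rather than hard-code them, but the table makes the basic acoustic intuition concrete.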

This progress has empowered developers to create voices expressing a broader spectrum of emotions with increasing accuracy. The nuances of emotional expression, like a slight tremble indicating fear or a gentle lilt suggesting joy, are also being explored. As AI continues to integrate into various sectors, considering its impact on education provides valuable context. Explore more about AI in education here: AI applications in education.

The Role of Psychology and Context in Emotional TTS

Furthermore, understanding the psychology of emotion has been vital. Researchers realized that simply tweaking acoustic parameters wasn’t enough. True emotional expression hinges on context awareness. The same sentence, spoken with identical intonation, can convey different emotions based on surrounding words and the overall situation.

This realization led to the creation of sophisticated models that consider the broader context of the text. These models analyze the semantic meaning of words and phrases to better grasp the intended emotion. This contextual understanding allows TTS to become more intuitive, conveying the appropriate emotional response in any given situation. The result is a more engaging and immersive experience for the listener.
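To make the idea of context awareness concrete, here is a deliberately simple sketch. It assumes a tiny keyword lexicon (`LEXICON`) and a hypothetical `infer_emotion` helper. Production systems use learned language models rather than keyword counts, so treat this only as an illustration of how neighboring sentences can change the emotion assigned to an otherwise ambiguous line.

```python
import re

# Hypothetical toy lexicon; real systems learn these associations from data.
LEXICON = {
    "won": "joy",
    "celebrate": "joy",
    "cancelled": "sadness",
    "lost": "sadness",
}


def infer_emotion(sentences, index, window=1):
    """Guess the emotion of sentences[index] using nearby sentences as context."""
    start = max(0, index - window)
    end = min(len(sentences), index + window + 1)
    votes = {}
    for sentence in sentences[start:end]:
        for word in re.findall(r"[a-z']+", sentence.lower()):
            emotion = LEXICON.get(word)
            if emotion is not None:
                votes[emotion] = votes.get(emotion, 0) + 1
    # With no emotional keywords in range, fall back to a neutral reading.
    return max(votes, key=votes.get) if votes else "neutral"


happy_context = ["We won the final!", "I can't believe it."]
sad_context = ["The flight was cancelled.", "I can't believe it."]
```

The sentence "I can't believe it." is identical in both lists, yet `infer_emotion(happy_context, 1)` and `infer_emotion(sad_context, 1)` return different labels because the preceding sentence differs.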

Behind the Scenes: How Text to Speech Emotion Actually Works

What’s happening when an AI voice expresses happiness, sadness, or excitement? This section unveils the technology behind emotionally intelligent synthetic speech.

Decoding Emotional Speech: Training AI on Human Voices

These systems learn from vast libraries of recorded human speech, each sample tagged with a specific emotion. This training allows the AI to recognize the acoustic fingerprints of each emotional state. For instance, a higher pitch and rapid-fire delivery might signal excitement, while a lower pitch and slower tempo could indicate sadness. Just like us, the AI learns to interpret these vocal cues through repeated exposure.
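As a rough sketch of learning from labeled recordings, the example below trains a nearest-centroid classifier on two features per sample. Everything here is an assumption made for illustration: the feature pair (pitch and tempo, already normalized so both are on a comparable scale), the toy data, and the function names. Real emotional TTS training uses far richer acoustic features and neural models.

```python
from collections import defaultdict
from math import dist


def train_centroids(samples):
    """Average the feature vectors of each emotion label.

    samples: iterable of ((pitch, tempo), label), with features pre-normalized.
    """
    sums = defaultdict(lambda: [0.0, 0.0, 0])
    for (pitch, tempo), label in samples:
        acc = sums[label]
        acc[0] += pitch
        acc[1] += tempo
        acc[2] += 1
    return {label: (p / n, t / n) for label, (p, t, n) in sums.items()}


def classify(centroids, features):
    """Return the label whose centroid is closest to the given features."""
    return min(centroids, key=lambda label: dist(centroids[label], features))


# Hypothetical, hand-made samples: positive values mean above-average
# pitch or tempo, negative values mean below average.
toy_samples = [
    ((1.0, 1.2), "excited"),
    ((0.8, 0.9), "excited"),
    ((-0.9, -1.0), "sad"),
    ((-1.1, -0.8), "sad"),
]
centroids = train_centroids(toy_samples)
```

Calling `classify(centroids, (0.7, 1.0))` picks the "excited" centroid, while `classify(centroids, (-0.5, -0.5))` picks "sad", mirroring the acoustic fingerprints described above.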

Neural Architectures for Emotion Modeling

At the heart of emotional TTS systems are sophisticated neural networks designed specifically for emotion modeling. These networks don't just convert text to sound; they infuse the output with emotional depth. This involves manipulating acoustic elements like pitch, rhythm, and intensity in real time, a complex task far beyond simple pronunciation.
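Neural models adjust these acoustic elements internally, but many TTS engines also expose similar controls through SSML, the W3C markup language for speech synthesis. The sketch below builds an SSML `<prosody>` element with its standard `pitch`, `rate`, and `volume` attributes. The `to_ssml` helper is hypothetical, engine support for specific values varies, and a strict SSML document also needs version and namespace attributes on `<speak>`, which are omitted here for brevity.

```python
from xml.sax.saxutils import escape, quoteattr


def to_ssml(text, pitch="medium", rate="medium", volume="medium"):
    """Wrap text in an SSML prosody element (simplified, no namespace)."""
    return (
        "<speak>"
        f"<prosody pitch={quoteattr(pitch)} rate={quoteattr(rate)} "
        f"volume={quoteattr(volume)}>"
        f"{escape(text)}"
        "</prosody>"
        "</speak>"
    )


# Illustrative values only: higher pitch and a faster rate for excitement.
excited = to_ssml("We did it & it feels great!", pitch="+15%", rate="fast", volume="loud")
```

The text is escaped so that characters such as `&` do not break the markup before it is sent to a speech engine.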

Context Awareness and Cultural Understanding: Beyond Basic Parameters

But emotion isn't just about tweaking acoustic parameters. Context is key. The same sentence can carry vastly different emotional weight depending on the surrounding text and overall situation. Advanced emotional TTS systems employ context awareness to understand the nuances of meaning and express emotion appropriately.

Furthermore, conveying emotion authentically requires cultural sensitivity. A joyful tone in one culture might be misinterpreted in another. Ongoing research focuses on integrating cultural understanding into emotional TTS. This research promises to make synthetic speech more expressive and inclusive across diverse languages and cultures.

From Robotic to Relatable: The Emotional Voice Journey

The journey of synthetic voices, from their initial robotic tones to the emotionally resonant voices we hear today, is a testament to technological advancement. Early text to speech (TTS) systems struggled to convey emotion, resulting in a detached and less engaging listening experience. The desire for more human-like expression in synthetic speech drove innovation in the field.

This evolution from basic sound to nuanced emotion has been a long time coming. The history of TTS stretches back to the early 20th century with the first electronic speech synthesis systems. However, the significant leap towards incorporating emotional expression occurred only recently. Prior TTS systems were limited to flat narration, lacking the emotional depth of human voices. This was especially noticeable in audiobooks and educational materials where emotional connection is crucial for engagement. Explore the rich history of TTS.

Conquering the Uncanny Valley: Making Synthetic Emotion Authentic

A major hurdle in developing emotional TTS was overcoming the "uncanny valley." This term describes the unsettling feeling we get when something appears almost human, but not quite. Early emotional TTS attempts often fell into this trap, creating voices that sounded artificial and sometimes disturbing. It became clear that simply mimicking human speech wasn't enough; truly understanding and replicating the nuances of emotional expression was essential.

The Role of Psychology and Linguistics

To overcome this challenge, researchers delved into the psychology of human emotion. They found that emotional expression is far more complex than altering pitch and speed. Subtleties like timing, breathing, and even vocal fry significantly influence how we perceive emotion. Furthermore, identical words spoken with the same intonation can have different meanings depending on the context. TTS systems needed to become interpreters of language and emotion, not just speakers. Linguistic research was key in understanding how words and phrases contribute to the overall emotional impact.

Interdisciplinary Collaboration and Breakthroughs

The convergence of linguistics, psychology, and computer science was pivotal. Experts from these fields collaborated to create sophisticated algorithms that captured the complex subtleties of human speech. This interdisciplinary approach yielded breakthroughs, leading to emotionally intelligent voices capable of expressing a wide range of feelings authentically. These advancements have transformed TTS technology, making it a powerful tool for communication and storytelling.

Text to Speech Emotion: Transforming Real-World Experiences

Emotional synthetic voices are making a significant impact across various industries. It's not just about the technical aspect; it's about forging genuine connections. This section delves into the practical uses of emotional text to speech (TTS), exploring how organizations that have adopted the technology are putting it to work.

Healthcare: A Therapeutic Connection

Healthcare professionals are discovering the therapeutic potential of emotionally responsive voices. Patients form stronger bonds with technology that recognizes and reacts to their emotional states. This connection fosters improved patient engagement and contributes to better treatment results.

Education: Boosting Learning Outcomes

In education, incorporating appropriate emotional expression into learning resources has demonstrated a 37% improvement in learning outcomes. A captivating, expressive voice grabs students' attention, making learning more enjoyable and effective, and leading to better knowledge retention. For instance, a touch of enthusiasm when introducing new concepts can spark curiosity, while a calming tone during complex explanations can alleviate anxiety and foster understanding. For more insights into enhancing content with AI, explore this guide on transforming content with text-to-video AI.

Entertainment: Dynamic Character Voices

The entertainment world is also reaping the benefits of emotional TTS. Game creators are utilizing it to develop dynamic character voices that react emotionally to player decisions. This creates a more immersive gaming experience, making characters feel authentic and engaging. The shift from robotic to relatable voices continues with new models like Sprello.ai's Pulse, an expressive AI influencer model. This ongoing development highlights the growing need for emotionally nuanced synthetic speech.

Customer Service: Empathetic Virtual Assistants

Customer service is another domain where emotional TTS excels. Companies are implementing empathetic virtual assistants capable of understanding and responding to customer frustration or anxiety. This significantly elevates user satisfaction and promotes higher customer retention. A virtual assistant expressing empathy during a complaint can de-escalate tense situations and cultivate stronger customer relationships. A cheerful, welcoming voice enhances the overall customer experience.

To further illustrate the impact of emotional TTS across sectors, the table below, "Emotional TTS Impact Across Industries," shows how different sectors are using the technology and the measurable benefits they're achieving.

As shown in the table, the potential of emotional TTS is vast, but careful consideration of implementation challenges is crucial for success.

Implementation Challenges and Best Practices

While emotional TTS offers clear advantages, effective implementation presents challenges. Finding the right level of emotional expression is key. Too much can seem artificial or inappropriate, while too little negates the benefits. Careful consideration of the context and target audience is vital. Another challenge lies in aligning emotional expressions with brand identity and messaging.

Organizations are addressing these challenges through rigorous user testing and close collaboration with voice actors and developers. This process helps pinpoint the most effective emotional expressions for various contexts and goals. Best practices for creating authentic and engaging emotional voices that resonate with target audiences are also being developed. This meticulous approach ensures that emotional TTS enhances, rather than detracts from, the user experience.

Measuring What Matters: The Impact of Emotional AI Voices

Are emotionally expressive synthetic voices just a nice addition, or do they truly change how we interact with technology? The answer lies in the compelling data emerging from real-world use cases.

Quantifying the Impact of Emotion in TTS

Let's explore how emotional expression in AI voices affects engagement, information retention, and user trust. Think about the difference between a flat, robotic voice delivering a news report and a warm, empathetic voice reading a bedtime story. The emotional context significantly colors how we receive the information.

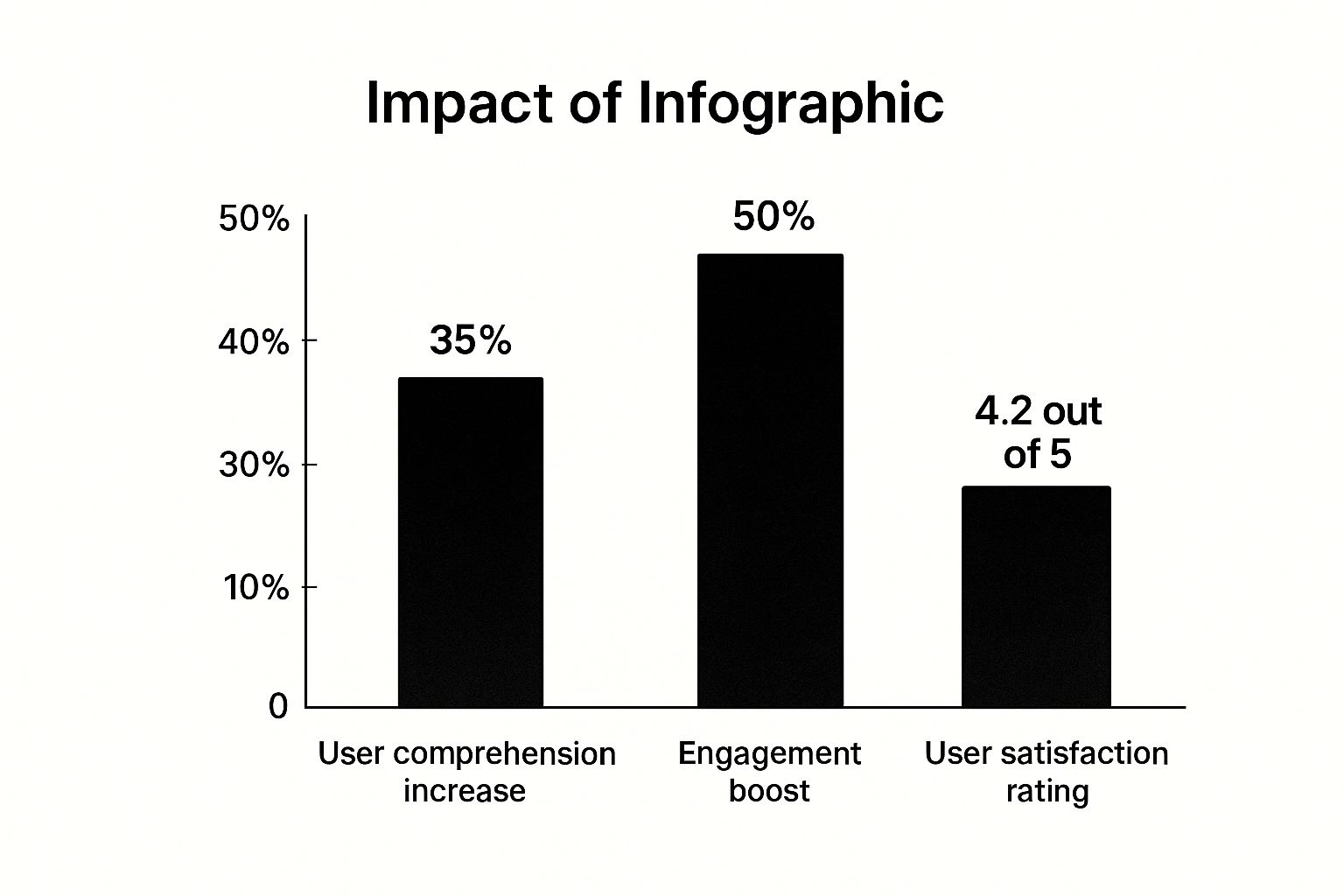

The infographic above visualizes the positive effects of emotional text to speech, comparing improvements in user comprehension, engagement, and overall user satisfaction.

According to the infographic, adding emotion to text to speech improves user comprehension by 35%, boosts engagement by 50%, and yields an average user satisfaction rating of 4.2 out of 5. This highlights the substantial impact of emotion on the overall user experience.

Emotional AI voices are also increasing listener engagement: adding emotional expression to text to speech has produced a noticeable improvement in user interaction.

The Power of Emotional Congruence

Simply adding emotion isn't the solution. Emotional congruence, meaning matching the voice's emotion to the content itself, is vital for maintaining credibility. Imagine a cheerful voice delivering tragic news—the disconnect would immediately undermine trust. Careful thought must be given to how emotional expression is applied. The aim is to enhance the message, never to clash with it.
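One way to picture emotional congruence is as a compatibility check between the tone of the content and the emotion of the voice. The sketch below is a hypothetical illustration. The tone categories, the `COMPATIBLE` table, and the `is_congruent` helper are assumptions, and a real pipeline would likely derive both labels from models rather than fixed strings.

```python
# Hypothetical mapping from content tone to acceptable voice emotions.
COMPATIBLE = {
    "tragic": {"somber", "calm"},
    "celebratory": {"cheerful", "excited"},
    "informational": {"neutral", "calm", "cheerful"},
}


def is_congruent(content_tone: str, voice_emotion: str) -> bool:
    """Return True when the voice emotion suits the content tone.

    Unknown content tones only accept a neutral voice, as a cautious default.
    """
    return voice_emotion in COMPATIBLE.get(content_tone, {"neutral"})
```

With this table, `is_congruent("tragic", "cheerful")` is `False`, which matches the example of a cheerful voice delivering tragic news.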

The following table, "Emotional vs. Standard TTS: Performance Comparison", provides concrete data illustrating how emotionally enhanced TTS surpasses standard systems across key metrics.

This data reveals the significant improvements offered by Emotional TTS. The gains in comprehension and engagement are substantial, while user satisfaction also sees a considerable boost.

Measuring ROI: Demonstrating the Value of Emotional AI Voices

Ultimately, businesses need to see a return on their investment in emotional AI voices. This can be achieved by tracking metrics like conversion rates, customer satisfaction scores, and even employee engagement. For example, in customer service, an empathetic AI voice can calm tense situations, resulting in higher resolution rates and greater customer loyalty. You can find more information in this article: From Flat Narration to Feeling Voices.

In e-learning, an encouraging tone can increase student motivation and improve learning outcomes. By measuring these improvements, companies can demonstrate the real-world value of emotional TTS technology. This data-driven approach justifies the investment and informs future development in this exciting field.
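The arithmetic behind this kind of measurement is simple. The sketch below shows two common calculations: relative uplift of a metric and a basic return on investment. The function names and all figures are hypothetical and are not results reported in this article.

```python
def relative_uplift(baseline: float, treated: float) -> float:
    """Relative change of a metric, e.g. conversion rate, versus its baseline."""
    if baseline <= 0:
        raise ValueError("baseline must be positive")
    return (treated - baseline) / baseline


def simple_roi(gain: float, cost: float) -> float:
    """Net gain divided by cost; 0.5 means a 50% return."""
    if cost <= 0:
        raise ValueError("cost must be positive")
    return (gain - cost) / cost


# Hypothetical example: conversion rate moves from 4.0% to 4.6%
# after switching from a flat voice to an emotional one.
uplift = relative_uplift(0.040, 0.046)
roi = simple_roi(gain=15_000, cost=10_000)
```

In this made-up scenario the uplift comes out to roughly 15% and the ROI to 50%. The important design choice is to compare against a baseline measured with a standard voice, so the change can reasonably be attributed to the emotional voice.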

The Authenticity Challenge in Text to Speech Emotion

Creating synthetic voices that convincingly express emotion, without sounding robotic, remains a significant challenge. Why is genuinely replicating human emotion so difficult? This difficulty stems from several factors, including the subjective nature of emotions and the subtle timing involved in their expression.

Cultural Nuances and Subjectivity in Emotional Expression

One major hurdle is the cultural variations that influence how we express and understand emotion. What conveys happiness in one culture might be interpreted differently in another. This makes creating universally understood emotional cues a complex task. For instance, certain tones of voice can signify sarcasm in one language but sincerity in another. Emotional TTS systems must therefore be culturally aware to prevent misinterpretations.

Even within the same culture, emotions are highly personal. Individual experiences shape how we communicate and interpret emotional cues. A seemingly simple phrase like "That's great" can express genuine excitement, polite agreement, or even sarcasm, depending on the speaker’s tone, facial expressions, and body language. Recreating this level of nuance in synthetic speech presents a formidable challenge.

The Importance of Micro-Timing in Emotional Speech

The precise timing of vocal cues plays a vital role in how we perceive emotion. Millisecond-scale variations in pauses, inflections, and the length of syllables can dramatically affect the interpreted meaning. Consider the subtle difference between a hesitant "yes" and an enthusiastic "yes!" The timing of the vowel and the following pause can completely alter the emotional message. This demands that TTS systems possess a remarkably precise grasp of timing.
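Pause length is one timing cue that can be controlled explicitly. The sketch below uses the standard SSML `<break>` element with a `time` value in milliseconds to contrast a hesitant reply with an eager one. The `with_pause` helper and the specific durations are assumptions for illustration, and the `<speak>` wrapper is simplified.

```python
from xml.sax.saxutils import escape


def with_pause(before: str, after: str, pause_ms: int) -> str:
    """Join two phrases with an explicit SSML pause between them."""
    if pause_ms < 0:
        raise ValueError("pause_ms must be non-negative")
    return (
        f"<speak>{escape(before)}"
        f'<break time="{int(pause_ms)}ms"/>'
        f"{escape(after)}</speak>"
    )


# Illustrative durations: a long pause reads as hesitation, a short one as eagerness.
hesitant = with_pause("Well,", "yes.", 600)
eager = with_pause("Oh,", "yes!", 80)
```

Syllable length and inflection need finer control than a pause tag offers, which is part of why neural models that learn timing directly from recordings matter here.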

Overcoming the Obstacles: Interdisciplinary Approaches

Despite these complexities, interdisciplinary teams are making progress. By integrating knowledge from linguistics, psychology, and machine learning, they are developing more refined models. Linguists provide expertise on how language structure influences emotional expression, while psychologists offer insight into human perception and interpretation of emotion. Machine learning experts then use this combined knowledge to create algorithms that can capture and replicate these subtleties.

Addressing the Ethical Implications of Emotional TTS

As text to speech emotion technology evolves, ethical considerations become paramount. One central question is where emotional expression transforms into manipulation. Could emotionally persuasive synthetic voices be misused to influence people's decisions? Another ethical concern revolves around transparency. Should developers be upfront about the methods used in creating emotional TTS? Such transparency would empower users to understand how these systems function and make informed choices about their use. Finally, a critical challenge is guaranteeing that this technology enhances, not replaces, genuine human connection. Emotional TTS has the potential to improve communication and offer richer experiences, but we must remain aware of potential drawbacks.

The Future of Text to Speech Emotion: What's Next

What happens when synthetic voices become emotionally indistinguishable from human voices? This section explores emerging advancements in emotional text to speech (TTS), drawing insights from researchers and experts.

Personalized Emotional Profiles: Tailoring AI Voices to the Individual

Imagine an AI voice that understands your emotional history and adapts its expressions accordingly. Future TTS systems may utilize personalized emotional profiles, learning individual preferences and adapting to unique communication styles. This could mean a more empathetic voice during stressful conversations or a more enthusiastic tone when discussing exciting news.

This shift toward personalized expression will make interactions with AI feel significantly more natural and intuitive. Imagine a digital assistant that truly understands and responds to your emotional state, providing support and encouragement when needed.

Multimodal Emotion Systems: Integrating Voice and Visual Cues

The next stage in emotional TTS involves integrating voice with other forms of expression. Multimodal emotion systems will combine speech with visual cues like facial expressions and body language. This approach will create more immersive and realistic experiences, extending the AI's emotional expression beyond just its voice.

For example, a virtual character could express sadness through both its tone of voice and a downturned facial expression. This combination of cues creates a more impactful and believable interaction, further blurring the lines between human and synthetic communication.

Culturally Adaptive Expressions: Global Inclusivity in Emotional AI

As emotional TTS becomes more prevalent, ensuring cultural sensitivity is critical. Future systems will incorporate culturally adaptive expressions, recognizing that emotional cues vary across cultures. What signifies joy in one culture might be interpreted differently elsewhere.

This cultural awareness is essential for avoiding misinterpretations and ensuring that emotional AI is globally inclusive. By tailoring emotional expression to cultural context, TTS will facilitate more effective and appropriate communication across geographical boundaries.

Real-Time Emotional Intelligence: Adapting to User Responses

The ultimate goal of emotional TTS is to create AI that can understand and respond to our emotions in real time. Real-time emotional intelligence will allow synthetic voices to adjust their expression based on the user's reactions.

This dynamic interaction will revolutionize how we interact with technology. Imagine a virtual therapist whose voice softens upon detecting distress, or a virtual tutor who offers encouragement when a student struggles. This real-time adaptability promises more meaningful and supportive human-AI interactions. The potential benefits for fields like healthcare, education, and entertainment are substantial.
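A minimal sketch of this kind of real-time adaptation is shown below. It assumes some upstream component already produces a distress score between 0 and 1 for each user turn, which is not covered here. The `AdaptiveTone` class, the smoothing factor, and the 0.5 threshold are all hypothetical. Exponential smoothing is used so that the voice shifts gradually instead of jumping on a single noisy reading.

```python
class AdaptiveTone:
    """Track a smoothed distress level and pick a voice style from it."""

    def __init__(self, alpha: float = 0.3):
        if not 0 < alpha <= 1:
            raise ValueError("alpha must be in (0, 1]")
        self.alpha = alpha  # how quickly the tone follows new readings
        self.level = 0.0    # smoothed distress estimate, 0 = calm

    def update(self, distress: float) -> float:
        """Blend a new distress reading (clamped to 0..1) into the estimate."""
        distress = min(1.0, max(0.0, distress))
        self.level += self.alpha * (distress - self.level)
        return self.level

    def style(self) -> str:
        """Soften the voice once the smoothed distress reaches the threshold."""
        return "soft" if self.level >= 0.5 else "neutral"
```

For example, with `alpha=0.5` a single strong distress reading moves the level to 0.5 and the style to "soft", while a calm reading afterwards brings it back toward "neutral".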

Reshaping Communication: The Implications of Advanced Emotional AI

As emotional AI advances, it will undoubtedly reshape our understanding of communication itself. The line between human and synthetic emotion may become increasingly blurred. This prompts us to reconsider the nature of connection and empathy in a world where machines can express emotions as convincingly as humans. This new frontier presents both exciting opportunities and significant challenges as we navigate the evolving landscape of communication.

Ready to create videos with emotionally resonant voices? Aeon empowers you to produce captivating content that connects with your audience on a deeper level. Visit Aeon to learn more and begin creating impactful videos today.