A lip sync application is software that alters a person's mouth movements in a video so they match a new audio track. Imagine making someone in a video appear to speak a completely different language, flawlessly. That's the magic here. It removes much of the need for clunky, old-school dubbing or painstaking manual animation, creating a viewing experience that just feels right.

What Are Lip Sync Applications and Why Are They a Big Deal?

At its heart, a lip sync app tackles a core problem in video: making spoken words look real. Our brains are hardwired to notice when someone's lip movements don't match what we're hearing, even if it's off by a split second. That tiny disconnect can make an otherwise great video feel cheap or unprofessional, pulling viewers right out of the moment.

This technology automates the tedious, frame-by-frame work of aligning mouth shapes to sounds. It analyzes an audio file, identifies the specific sounds being made, and maps them to the correct visual mouth shapes. The result is fluid, believable speech for any person or character, and the impact is huge, especially now that video is king for engaging audiences.

The Rush for More Realistic Digital Content

The demand for high-quality video that can be produced quickly and at scale is pushing this technology into the spotlight. In 2024, the global market for lip sync automation was valued at around $412 million, and it's projected to reach $2.31 billion by 2033. That’s a compound annual growth rate of about 21.2%, fueled by our appetite for hyper-realistic animation and immersive digital worlds. You can dive deeper into these market growth insights at marketintelo.com.
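If you want to sanity-check growth figures like these, the standard compound annual growth rate (CAGR) formula is (end value / start value)^(1 / years) - 1. The minimal Python sketch below plugs in the figures quoted above. The function name is just an illustrative choice.

```python
def implied_cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate implied by a start value and an end value."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Figures quoted above, in millions of USD; 2024 to 2033 is 9 years of growth.
rate = implied_cagr(412, 2_310, 2033 - 2024)
print(f"Implied CAGR: {rate:.1%}")
```

This prints roughly 21.1%. That is in line with the quoted 21.2% once you allow for rounding in the published start and end values.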

But this isn't just about making cartoons or video game characters talk. It's about tearing down communication walls and forging stronger connections with people. A solid lip sync application is a game-changer for:

- Taking Content Global: You can dub videos into dozens of languages without losing the original speaker's authentic emotion and performance.

- Smarter Production Budgets: It drastically cuts down the costs associated with hiring voice actors, booking dubbing studios, and enduring long post-production edits.

- Boosting Viewer Engagement: It helps create content that feels more personal and trustworthy, whether it's a customized marketing video or an interactive product demo.

When what you see and what you hear are perfectly in sync, the content just clicks. It feels authentic. This builds trust with your audience and makes your message stick.

In the end, this technology is far more than a cool special effect. It’s a strategic asset for creators, advertisers, and businesses who want to produce top-tier, localized video for a global audience. By eliminating the old technical and financial barriers, it unlocks brand new ways to connect with people in a way that feels genuinely human.

To break it down, here’s a quick overview of what this technology is all about.

Lip Sync Technology At a Glance

The table below summarizes the core ideas behind lip sync applications, highlighting what they do, why it's beneficial, and who uses them the most.

This snapshot really shows how lip sync technology is becoming a go-to tool for anyone looking to create more compelling and scalable video content.

How AI Pulls Off Seamless Lip Syncing

Ever wonder how modern lip sync applications work their magic? It’s not just clever video editing—it's a sophisticated process run by artificial intelligence. Think of the AI as a master translator, but instead of converting one language to another, it turns sound into sight. It meticulously breaks down an audio track and rebuilds it into believable, fluid facial animation.

The whole thing kicks off the moment an audio file is introduced. The AI’s first job is to "listen" closely, breaking down the words into their smallest acoustic building blocks. It doesn't hear "words" the way we do; it identifies phonemes.

A phoneme is the smallest unit of sound that can change a word's meaning. For instance, the word "pat" has three phonemes: "p," "a," and "t." Swap the "p" for a "b" and you get "bat," a different word. A well-trained AI, fed with massive linguistic datasets, can pinpoint these individual sounds with high accuracy, even when dealing with different accents or speech patterns.

From Sound to Shape: The Phoneme-Viseme Connection

Once the AI has its list of phonemes from the audio, the next step is to translate them into visual mouth movements. This is where the viseme comes into play. A viseme is simply the visual version of a phoneme—it’s the distinct mouth shape we create when making a specific sound.

For example, the sounds for "p," "b," and "m" all require you to close your lips, so they all belong to the same viseme group. The AI works like a digital linguist, using a detailed map that connects every identified sound to its corresponding mouth shape. This phoneme-to-viseme mapping is the bedrock of believable lip sync; get it wrong, and the whole illusion falls apart. To get a better feel for the underlying tech, it helps to understand how an AI Voice Generator constructs synthetic speech from the ground up.
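Here's a minimal Python sketch of what that mapping might look like. The phoneme symbols are ARPAbet, the notation used by the CMU Pronouncing Dictionary, with stress markers omitted. The viseme names and groupings are a simplified, hypothetical set for illustration only. Production systems use their own, larger tables.

```python
# Illustrative phoneme-to-viseme table. Phonemes are ARPAbet symbols;
# the viseme names and groupings are a simplified, hypothetical set.
PHONEME_TO_VISEME = {
    # Lips pressed together
    "P": "closed_lips", "B": "closed_lips", "M": "closed_lips",
    # Lower lip against upper teeth
    "F": "lip_teeth", "V": "lip_teeth",
    # Rounded lips
    "UW": "rounded", "OW": "rounded", "W": "rounded",
    # Open-jaw vowels
    "AA": "open", "AE": "open", "AH": "open",
    # Tongue against the ridge behind the upper teeth
    "T": "tongue_ridge", "D": "tongue_ridge", "N": "tongue_ridge", "L": "tongue_ridge",
    # Teeth close together
    "S": "teeth_together", "Z": "teeth_together",
}


def phonemes_to_visemes(phonemes):
    """Map each phoneme to its viseme, falling back to a neutral mouth shape."""
    return [PHONEME_TO_VISEME.get(p, "neutral") for p in phonemes]


# "pat" is P AE T and "bat" is B AE T: different phonemes, identical mouth shapes.
print(phonemes_to_visemes(["P", "AE", "T"]))
print(phonemes_to_visemes(["B", "AE", "T"]))
```

Notice that "pat" and "bat" produce the same viseme sequence. That many-to-one relationship is expected: the audio tells the two words apart, while the lips only need to show the shared shape.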

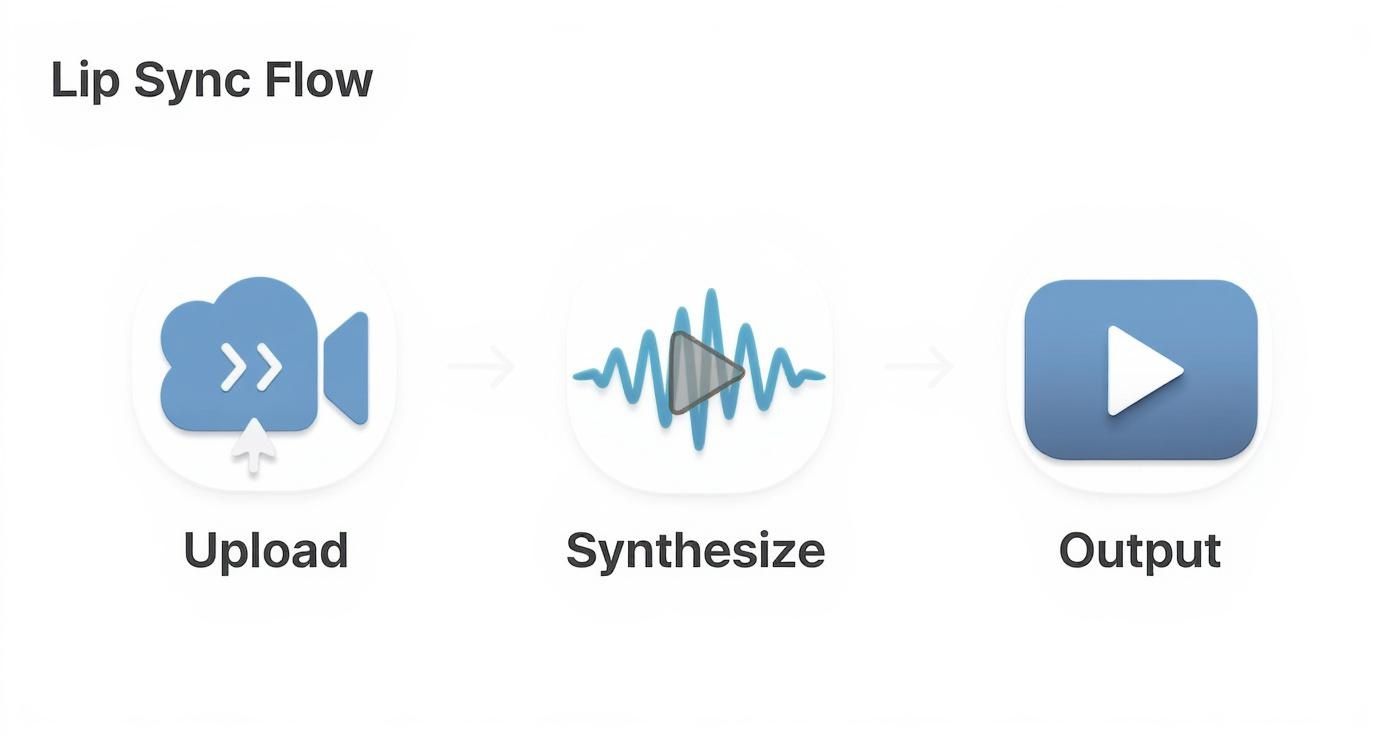

The infographic below gives a bird's-eye view of this workflow, from the initial content upload to the final, perfectly synced video.

This visual breaks down the complex AI pipeline into three main stages, showing the journey from raw materials to a polished, ready-to-watch final product.

Bringing a Face to Life With Precision

With the right sequence of mouth shapes lined up, the final piece of the puzzle is the animation itself. This is so much more than just slapping static images onto a face. A top-tier lip sync application uses powerful animation engines to create buttery-smooth transitions between visemes, mimicking the way a real person's mouth moves when speaking.

The AI looks at the timing and intensity of the audio to drive the animation's speed and subtlety. A loud, boisterous word will trigger a wider, more pronounced mouth shape, while a soft whisper will create a much smaller, more delicate movement. The system also intelligently blends visemes together, so you don't get that jerky, robotic look. One shape flows naturally into the next.
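As a rough illustration of the two ideas in that paragraph, here is a minimal Python sketch. The first idea is loudness scaling the size of a mouth shape. The second is smooth blending between shapes. The two-parameter `MouthPose`, the clamped loudness scale, and the smoothstep easing curve are all assumptions chosen for clarity. They are not how any particular engine works.

```python
from dataclasses import dataclass


@dataclass
class MouthPose:
    """Hypothetical mouth parameters, each normalized to the range 0..1."""
    jaw_open: float
    lip_round: float


def scale_by_loudness(pose: MouthPose, loudness: float) -> MouthPose:
    """Shrink a pose for quiet audio; loudness is clamped to 0..1."""
    k = max(0.0, min(1.0, loudness))
    return MouthPose(pose.jaw_open * k, pose.lip_round * k)


def smoothstep(t: float) -> float:
    """Ease-in/ease-out curve so transitions start and end gently."""
    t = max(0.0, min(1.0, t))
    return t * t * (3 - 2 * t)


def blend(a: MouthPose, b: MouthPose, t: float) -> MouthPose:
    """Interpolate between two poses: t=0 returns a, t=1 returns b."""
    w = smoothstep(t)
    return MouthPose(
        a.jaw_open * (1 - w) + b.jaw_open * w,
        a.lip_round * (1 - w) + b.lip_round * w,
    )


open_vowel = MouthPose(jaw_open=1.0, lip_round=0.0)  # e.g. "ah"
rounded = MouthPose(jaw_open=0.3, lip_round=1.0)     # e.g. "oo"

# A whisper gets a smaller version of the same shape.
print(scale_by_loudness(open_vowel, 0.3))

# Five in-between frames instead of a hard cut from one shape to the next.
for i in range(5):
    print(blend(open_vowel, rounded, i / 4))
```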

This intricate dance has a few key partners:

- Audio Analysis: The AI engine meticulously identifies the sequence of phonemes in the audio file.

- Viseme Mapping: It matches every single phoneme to its corresponding visual mouth shape (the viseme).

- Facial Rigging: The AI then maps these visemes onto a model of the speaker's face (often a 3D model), getting it ready for animation.

- Animation Synthesis: Finally, the engine generates the animation, ensuring every mouth shape is perfectly timed to the audio track with seamless transitions.
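Between the viseme-mapping and animation steps, the timed mouth shapes have to be laid out against the video's frame rate. The Python sketch below covers just that part of the pipeline. It assumes a forced aligner has already produced (phoneme, start, end) timings in seconds. That input format, the small viseme table, and the rule of merging adjacent identical shapes are all illustrative assumptions.

```python
from typing import Dict, List, Tuple

# Hypothetical forced-aligner output: (ARPAbet phoneme, start_s, end_s).
TimedPhoneme = Tuple[str, float, float]

# Simplified, illustrative viseme table (not a standard set).
VISEMES = {
    "P": "closed_lips", "B": "closed_lips", "M": "closed_lips",
    "AA": "open", "AE": "open", "AH": "open",
    "T": "tongue_ridge", "D": "tongue_ridge", "N": "tongue_ridge",
}


def to_keyframes(timed: List[TimedPhoneme], fps: int = 25) -> List[Dict]:
    """Turn timed phonemes into viseme keyframes measured in video frames.

    Consecutive phonemes that share a viseme are merged into one keyframe,
    so the mouth holds one shape across them instead of restarting it.
    """
    keyframes: List[Dict] = []
    for phoneme, start, end in timed:
        viseme = VISEMES.get(phoneme, "neutral")
        start_f, end_f = round(start * fps), round(end * fps)
        if keyframes and keyframes[-1]["viseme"] == viseme:
            keyframes[-1]["end_frame"] = end_f  # extend the held shape
        else:
            keyframes.append({"viseme": viseme, "start_frame": start_f, "end_frame": end_f})
    return keyframes


# Made-up alignment: the back-to-back M and B collapse into one closed-lips shape.
alignment = [("M", 0.00, 0.08), ("B", 0.08, 0.16), ("AA", 0.16, 0.32), ("T", 0.32, 0.40)]
for kf in to_keyframes(alignment):
    print(kf)
```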

The real goal isn't just to match shapes to sounds. It's to create a performance that feels natural and emotionally real. The best systems consider the cadence and energy of the voice to produce animation that is truly lifelike.

This fully automated process is what lets a lip sync application turn existing footage into dynamic, compelling content in a new language. By deconstructing speech into its core parts and reassembling it visually, AI makes it possible to create perfectly synchronized video at a scale that was once unthinkable. You can learn more about how this technology is changing content creation in our complete guide to AI lip sync.

Real-World Uses Transforming Industries

Beyond the cool tech, the real magic of a lip sync application is how it solves huge, expensive problems for businesses. This isn't just a gimmick; it's a serious tool for unlocking new revenue, slashing production schedules, and genuinely connecting with a global audience. From media giants to online retailers, companies are starting to completely overhaul their content strategies with this technology.

The market's growth tells the story. By another estimate, the AI lip sync market was valued at $382 million in 2024 and is on track to grow at a blistering 23.1% annually through 2033. Why? Because it finally cracks the code of dubbing content into new languages while keeping the speaker's original emotional delivery, blowing the doors open for creators to reach massive new audiences. You can dive deeper with this in-depth AI lip sync guide from truefan.ai.

What this really means is that creators and brands can now show up in international markets with a level of authenticity that was impossible before. A single marketing video can now be convincingly localized for ten different countries in the time it used to take to produce one traditional dub.

Revolutionizing Media and Entertainment

For any media company, going global has always been a race against the clock and the budget. Traditional dubbing is a painstaking, costly affair: you have to cast voice actors, book studios, and then slog through post-production. A lip sync application dramatically compresses that entire workflow.

News outlets can now push breaking stories in multiple languages almost in real-time, making sure their reporting hits with maximum impact. Entertainment studios can launch a new series in several territories at once, capitalizing on global marketing buzz and cutting down the risk of piracy.

- Speed to Market: A job that used to take months can now be done in days, sometimes even hours.

- Cost Efficiency: Say goodbye to massive bills for voice talent, studio rentals, and manual audio editing.

- Preserved Authenticity: The original actor’s performance—the nuance, the emotion—stays perfectly intact, just in a new language.

This shift lets media companies operate more like nimble tech startups, deploying content to meet audience demand anywhere in the world, instantly. It's a fundamental change in how content gets distributed.

This automated approach to localization isn't just about saving a few bucks; it’s about gaining a massive competitive advantage. If you want to expand your video’s international footprint, take a look at our complete guide to dubbing a video for global reach for more strategies.

Hyper-Personalization in Marketing and Ad Sales

In advertising, relevance is the name of the game. A message that lands perfectly with one audience can completely miss the mark with another. A lip sync application gives marketers the power to create hyper-personalized video ads on a scale we've never seen before.

Picture one promotional video for a new sneaker. With AI, that single video can be automatically tweaked to speak directly to different customer segments in their own language. The celebrity in the ad can be speaking Spanish, French, or Japanese, creating a powerful, personal connection that generic subtitles could never hope to match.

This is a game-changer for ad sales teams. They can now go to clients with highly targeted video campaigns that deliver much better engagement and, ultimately, more sales. We're moving away from a one-size-fits-all world and into one where thousands of tailored messages can be spun out from a single video file.

Enhancing the E-commerce Experience

Let's be honest, online shopping can feel a bit sterile. It lacks the human touch of walking into a real store. E-commerce brands are now using lip sync technology to close that gap and create a far more interactive and personal shopping journey.

By using AI-powered avatars or virtual product demonstrators, brands can offer personalized help to customers right on the website. These digital assistants can answer questions, explain complex product features, and even guide someone through the checkout process—all in the customer’s native language.

Here’s how it transforms the customer journey:

- Engaging Product Demos: An avatar of a product expert can walk a customer through the benefits of a new camera, with perfectly synced, natural-sounding speech.

- Multilingual Customer Support: Talking chatbots provide instant, localized support, boosting customer satisfaction and reducing support tickets.

- Increased Conversion Rates: When a shopping experience feels more personal and helpful, customers build trust and are far more likely to make a purchase.

At the end of the day, these applications make a digital storefront feel more human. By breaking down language barriers and adding that personal touch, lip sync tech is helping e-commerce brands build stronger relationships with their customers and drive real growth.

How To Weave Lip Sync Tech Into Your Workflow

Bringing powerful lip sync technology into your daily operations is more accessible than most people think. The real trick is picking the right approach for what you need to accomplish. Are you looking for instant, on-demand video creation, or do you have a massive library of content to process?

Your answer will point you toward one of two main paths: using an API for real-time jobs or batch processing for large-scale projects. Getting a handle on these options is the first step toward building a smarter, more scalable video strategy that can truly connect with a global audience.

Using APIs for Real-Time Video Creation

Think of an Application Programming Interface (API) as a direct line of communication that lets different software programs talk to each other. In the world of lip sync, an API integration allows your own website, app, or platform to request a perfectly synced video in real time. It’s the key to creating dynamic content on the fly.

Imagine a shopper on your e-commerce site clicks to hear a product description in Spanish. An API call can generate that video, complete with a natural Spanish voiceover, on demand. That's the power of real-time integration: it enables personalized experiences that happen in the moment.

This approach is perfect for:

- Personalized Video Ads: Automatically create thousands of ad variations, each with a voiceover customized for a specific audience.

- Interactive Avatars: Drive real-time conversations with digital assistants on your website, offering immediate, localized support.

- User-Generated Content: Let users on your platform create and share videos with automated dubbing features built right in.

An API gives you the ultimate flexibility, letting you embed automated lip sync capabilities directly into your existing digital products. To get even more out of your digital strategy, you can pair this with other effective AI marketing tools to create a truly cohesive system.
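For the e-commerce example above, the request your site sends might look something like the Python sketch below. Everything about the API itself is hypothetical: the endpoint URL on the reserved example.com domain, the JSON field names, and the bearer-token header are placeholders, since every provider defines its own contract. The sketch only builds the request with the standard library's `urllib.request` and does not send it.

```python
import json
import urllib.request

# Hypothetical endpoint; a real provider documents its own URL and fields.
API_URL = "https://api.example.com/v1/lip-sync"


def build_lip_sync_request(video_url: str, target_language: str, api_key: str) -> urllib.request.Request:
    """Build (but do not send) a POST request asking for a dubbed, lip-synced video."""
    payload = {
        "video_url": video_url,              # hypothetical field: source product video
        "target_language": target_language,  # hypothetical field: BCP 47 tag, e.g. "es-MX"
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


request = build_lip_sync_request("https://cdn.example.com/camera-demo.mp4", "es-MX", "YOUR_API_KEY")
print(request.get_method(), request.full_url)
# Against a real service you would send it with urllib.request.urlopen(request, timeout=30).
```

In practice, your site would build this request when the shopper clicks. Whether it then waits for the finished video or checks back later depends entirely on how the provider handles longer renders.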

Batch Processing for Large-Scale Projects

While APIs are fantastic for one-off, immediate requests, batch processing is the heavy lifter for tackling huge volumes of content. This method is exactly what it sounds like: you upload a large "batch" of video and audio files—like an entire e-learning course or a full season of a show—and let the system process them all at once.

This is the go-to solution for media companies looking to localize their entire back catalog or for a global business needing to update hundreds of training videos. The market reflects its value: by one estimate, it was worth around $1.12 billion in 2024 and is expected to reach $5.76 billion by 2034. That growth is fueled by industries like e-commerce and advertising, where authentic, synchronized lip movement is a must-have for audience engagement.

Batch processing isn't just about speed; it's about consistency. It helps ensure that every video in a huge library gets the same high-quality treatment, keeping your brand standards locked in across all your content.

By queueing up your videos for processing, your team is free to focus on more creative work while the lip sync engine does the heavy lifting in the background. Once it's done, you get a full set of perfectly synced videos, ready for distribution, saving an incredible amount of time.
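Here's a minimal Python sketch of the batch pattern: fan a library of videos out across target languages and process the jobs concurrently. `lip_sync_job` is a hypothetical stand-in for whatever call your provider or in-house engine exposes, and here it only returns an output file name. The sketch uses threads on the assumption that a real job would spend most of its time waiting on a remote render rather than doing CPU work locally.

```python
from concurrent.futures import ThreadPoolExecutor
from pathlib import Path


def lip_sync_job(video: str, language: str) -> str:
    """Hypothetical stand-in for one lip sync render; returns the output file name."""
    return f"{Path(video).stem}.{language}.mp4"


def process_batch(videos, languages, max_workers=4):
    """Run every (video, language) combination and return {job: output_file}."""
    jobs = [(video, lang) for video in videos for lang in languages]
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # map() yields results in input order, so they line up with `jobs`.
        outputs = list(pool.map(lambda job: lip_sync_job(*job), jobs))
    return dict(zip(jobs, outputs))


results = process_batch(["lesson01.mp4", "lesson02.mp4"], ["de", "ja"])
for (video, lang), out in results.items():
    print(video, lang, "->", out)
```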

Why You Still Need a Human in the Loop

No matter how you integrate this tech, quality control is everything. Even the most sophisticated AI can benefit from a quick human review. A "human-in-the-loop" (HITL) workflow creates a vital checkpoint, letting your team review and sign off on AI-generated videos before they're published.

This editorial oversight ensures the final video is not only technically perfect but also hits the right notes for your brand's voice and tone. A human reviewer can catch subtle nuances in expression or make sure the localized dialogue feels culturally authentic, not just translated. It’s that final polish that turns good automated content into great, trustworthy content.
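One simple way to enforce that checkpoint is to give every generated video a review status and publish only the approved ones. The Python sketch below shows one illustrative way to model such a gate. It is not a feature of any specific platform, and the class and field names are hypothetical.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import List


class Status(Enum):
    PENDING_REVIEW = "pending_review"
    APPROVED = "approved"
    REJECTED = "rejected"


@dataclass
class GeneratedVideo:
    """Hypothetical record for one AI-generated, lip-synced video."""
    video_id: str
    language: str
    status: Status = Status.PENDING_REVIEW
    reviewer_notes: List[str] = field(default_factory=list)


def review(video: GeneratedVideo, approved: bool, note: str = "") -> None:
    """Record a human decision; each video can only be signed off once."""
    if video.status is not Status.PENDING_REVIEW:
        raise ValueError(f"{video.video_id} has already been reviewed")
    video.status = Status.APPROVED if approved else Status.REJECTED
    if note:
        video.reviewer_notes.append(note)


def publishable(videos: List[GeneratedVideo]) -> List[GeneratedVideo]:
    """Only videos a human reviewer approved are allowed out the door."""
    return [v for v in videos if v.status is Status.APPROVED]


fr = GeneratedVideo("promo-fr", "fr")
ja = GeneratedVideo("promo-ja", "ja")
review(fr, approved=True)
review(ja, approved=False, note="Greeting is too informal for this audience")
print([v.video_id for v in publishable([fr, ja])])
```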

Comparison of Lip Sync Integration Methods

Choosing the right integration method depends entirely on your project's scale, speed requirements, and existing workflow. This table breaks down the core differences to help you decide which path makes the most sense for your team.

Ultimately, the best approach might even be a hybrid one, using an API for customer-facing features and batch processing for internal content updates, all governed by a human-in-the-loop for quality assurance.

Measuring the ROI of Your Lip Sync Strategy

Bringing any new tech into the fold, especially something as powerful as a lip sync application, means you have to prove its worth. The creative "wow" factor is great, but the real test is seeing a tangible return on investment (ROI). Justifying the budget and showing stakeholders that automated localization is a strategic move, not just a gimmick, all comes down to the numbers.

Luckily, the impact of high-quality, localized video isn’t just a feeling—it shows up clearly in your key performance indicators (KPIs). We’re talking about moving past vanity metrics like raw view counts and focusing on data that directly ties to revenue and real audience growth.

Key Performance Indicators to Track

So, how do you measure success? You watch how people actually interact with your new localized content. Are they sticking around longer? Are they more engaged? And, most importantly, are they doing what you want them to do?

Here are the essential KPIs you should be watching:

- Video Completion Rate: This is a huge indicator of engagement. If you see a big jump in completion rates for your localized videos compared to the old subtitled ones, you know the message is hitting home.

- Audience Growth in New Markets: Keep an eye on the percentage increase in viewers and subscribers from specific countries after you launch dubbed content. This is a direct measure of your global reach.

- Click-Through Rate (CTR) on CTAs: For any marketing or e-commerce video, track the CTR on your calls-to-action. A higher number here means the native-language pitch is way more convincing.

- Conversion Rate on Landing Pages: This is the big one. Measure the percentage of viewers who not only click through but also complete an action, like buying a product or signing up. This is the ultimate proof of commercial impact.
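Each of these KPIs is a simple ratio. The comparison that matters is the lift of the localized version over your previous baseline, such as the old subtitled video. The Python sketch below shows the arithmetic. The numbers are made up purely for illustration, and the sketch treats conversion rate as conversions per viewer, matching the definition above.

```python
from dataclasses import dataclass


@dataclass
class VideoStats:
    views: int
    completions: int   # viewers who watched to the end
    cta_clicks: int    # clicks on the call-to-action
    conversions: int   # purchases, sign-ups, etc.

    def completion_rate(self) -> float:
        return self.completions / self.views

    def ctr(self) -> float:
        return self.cta_clicks / self.views

    def conversion_rate(self) -> float:
        return self.conversions / self.views


def lift(new: float, baseline: float) -> float:
    """Relative change versus the baseline, e.g. 0.5 means +50%."""
    return (new - baseline) / baseline


# Made-up numbers for illustration only.
subtitled = VideoStats(views=10_000, completions=3_000, cta_clicks=200, conversions=40)
dubbed = VideoStats(views=10_000, completions=4_500, cta_clicks=320, conversions=60)

for name in ("completion_rate", "ctr", "conversion_rate"):
    before = getattr(subtitled, name)()
    after = getattr(dubbed, name)()
    print(f"{name}: {before:.1%} -> {after:.1%} ({lift(after, before):+.0%})")
```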

The whole point is to draw a straight line from using a lip sync application to hitting your core business goals. When you can show that localized videos led to a 20% spike in conversions from a new market, the tech’s value is impossible to argue with.

Calculating Your Return on Investment

Working out the ROI doesn't have to be a headache. A simple formula can help you put a dollar value on the benefits by weighing the cost of the technology against what you save and the new money you bring in. This gives you the hard numbers you need.

You can start with a basic framework that covers the most critical financial pieces.

A Simple ROI Formula:

- Calculate Total Savings: Add up all the money you didn't spend on traditional dubbing. This includes paying voice actors, renting studios, and countless hours of post-production for every single language.

- Calculate New Revenue Generated: Figure out the extra income that came from entering new markets. You can pull this straight from the increased conversion rates and audience growth you've been tracking.

- Determine Net Gain: Subtract the cost of your lip sync application (your subscription or processing fees) from the total of your savings and new revenue.

- Calculate ROI Percentage: Just divide that Net Gain by what you spent on the tech and multiply by 100. That’s your ROI percentage.
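The four steps above translate directly into a few lines of Python. This minimal sketch follows the formula exactly as written: avoided dubbing costs plus new revenue, minus the tool's cost, divided by that cost. The function name and the example figures are hypothetical.

```python
def lip_sync_roi(dubbing_savings: float, new_revenue: float, tool_cost: float) -> float:
    """Return ROI as a percentage, following the four-step formula above."""
    if tool_cost <= 0:
        raise ValueError("tool_cost must be positive")
    net_gain = dubbing_savings + new_revenue - tool_cost  # step 3: net gain
    return net_gain / tool_cost * 100                     # step 4: ROI percentage


# Hypothetical figures for one quarter.
roi = lip_sync_roi(dubbing_savings=50_000, new_revenue=30_000, tool_cost=20_000)
print(f"ROI: {roi:.0f}%")  # prints "ROI: 300%"
```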

This simple calculation completely changes the conversation. Suddenly, lip sync isn't just a creative "nice-to-have" anymore. It's a proven financial win—a powerful tool for both business growth and making your operations a whole lot smarter.

Key Ethical and Quality Considerations

Adopting powerful lip sync technology isn't just a technical upgrade; it's a commitment to using it responsibly. As these tools become more widespread, navigating the ethical side of things is every bit as important as nailing the technical workflow. This means thinking hard about consent, cultural nuances, and protecting your brand's integrity at every turn.

The power to make someone appear to say something new is a serious responsibility. The bedrock principle here must always be informed consent. This is non-negotiable, especially when you’re working with the likeness of public figures, influencers, or even your own team members. Using someone’s image without their explicit permission is a fast track to misuse, broken trust, and potential legal headaches.

On top of consent, data privacy is a huge deal. The video and audio files you're working with contain sensitive biometric data. It's absolutely critical to work with a technology partner who has ironclad security protocols. You need to be sure that your assets—and the identities of the people in your videos—are locked down and safe from any unauthorized access.

Going Beyond Literal Translation

This is where quality and ethics really start to overlap. A classic mistake is to assume that a simple, word-for-word translation will do the job. But if you want to connect with a global audience, you need to go much deeper into cultural context, regional dialects, and local customs. A message that lands perfectly in one market could easily fall flat or, worse, cause offense in another.

A thoughtful, high-quality localization process always takes these things into account:

- Cultural Sensitivity: It steers clear of phrases, jokes, or references that just don’t translate well or could be completely misinterpreted.

- Regional Dialects: It understands that the "Spanish" spoken in Spain is a world away from the Spanish used in Mexico, and it tailors the audio to match.

- Tone and Formality: The language is carefully adjusted to meet the cultural expectations of the audience you’re trying to reach.

Getting this right ensures your message feels authentic and respectful. You end up building a genuine connection with your new audience instead of just shouting translated words at them.

The goal is not just to be understood, but to be accepted. True localization makes your content feel like it was created for the local audience, not just translated at them. This builds the trust that is essential for brand loyalty.

Maintaining Brand Safety and Voice

Finally, every single piece of content you automate has to align perfectly with your brand’s voice and values. A lip sync application is a fantastic tool for scaling your content production, but that scale can't come at the expense of quality control. You absolutely need a review process to check generated videos for any errors and ensure they’re consistent with your brand messaging.

This simple step protects you from awkward phrasing or technical glitches that could chip away at your credibility. The rise of AI-generated content also means it's important to understand the broader category of synthetic media. For more context, you can explore our guide that explains what synthetic media is and its implications for creators. By putting these ethical guardrails and quality checks in place, you can confidently use lip sync technology to grow your audience while actually strengthening your brand's reputation.

Frequently Asked Questions

When you start digging into lip sync technology, a few key questions always come up. Let's walk through the most common ones to give you a clearer picture of how this all works and what it can do for you.

How Does This Technology Actually Work?

Think of it as a three-step process powered by smart AI. First, the application listens to an audio file and breaks it down into the smallest distinct sounds of a language, which are called phonemes.

Next, it matches those sounds to their visual equivalent—the mouth shapes we make when we speak. These shapes are called visemes. Finally, a powerful animation engine brings it all to life, seamlessly applying those mouth shapes to the speaker in the video. The result is new dialogue that looks natural and is perfectly timed.

Just How Good Is AI Lip Syncing?

It's surprisingly good. Modern AI systems have been trained on massive libraries of human speech and facial expressions, so they understand the subtle details that make speech look authentic. While you might spot a tiny imperfection here and there, a top-tier system produces results that are nearly impossible to tell apart from a real person speaking.

The real magic isn't just about getting the mouth shapes right. It's about creating a performance that feels genuine and emotionally connected to the new audio.

Can It Handle Different Languages and Accents?

Absolutely, and this is where the technology truly shines. A well-built lip sync application can process audio in dozens of languages and dialects. The AI is trained on diverse linguistic models, allowing it to correctly map the unique sounds of different languages. This is the key to creating content that feels local and authentic, no matter where your audience is.

Is This Complicated to Set Up?

Not anymore. Most modern platforms are built to fit right into your existing workflow without requiring a team of AI specialists. You generally have two simple options:

- API Integration: This is perfect if you want to create videos on the fly, right inside your own website or application.

- Batch Processing: Got a whole library of videos you need to update? This option lets you process large volumes of content all at once, saving a ton of time.

These flexible methods make it incredibly straightforward to add powerful lip-syncing tools to your content strategy.

Ready to take your video content global without the logistical headaches? Aeon’s AI platform automates video creation and localization, helping you produce high-quality, engaging content at scale. See how it works at https://www.project-aeon.com.