Unlocking the Future of Content: AI-Powered Creation in 2025

This listicle reveals seven key AI tools transforming content creation in 2025. Learn how AI empowers your team to produce high-quality content faster and more efficiently. From text and image generation to video and music creation, these advancements offer unprecedented creative control and scalability. Explore the top applications of AI for content creation, including GPT-based text generation, diffusion models, and generative adversarial networks (GANs), and discover how they can elevate your content strategy.

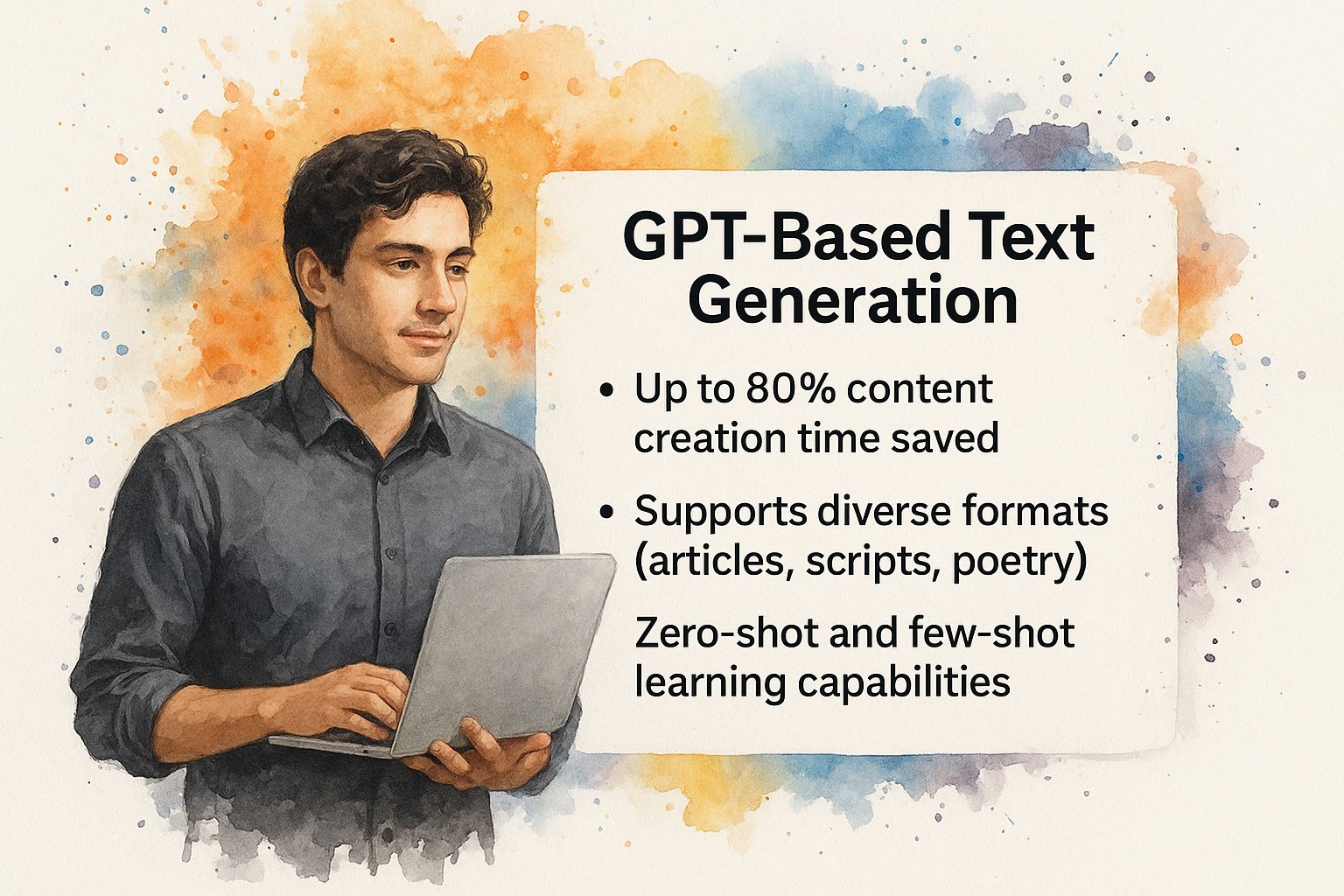

1. GPT-Based Text Generation

GPT-based text generation is revolutionizing content creation. Leveraging the power of Generative Pre-trained Transformer (GPT) models like GPT-4, this AI-driven approach produces human-like text across diverse formats. Trained on massive datasets, these models predict the next word in a sequence based on context, creating coherent and contextually relevant content from simple prompts or incomplete text. This makes them a powerful tool for anyone involved in AI for content creation.
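To make "predict the next word in a sequence" concrete, here is a deliberately tiny sketch. It uses a bigram frequency table rather than a transformer, so it only illustrates the predict-and-append loop, not how GPT models work internally. The corpus and all function names are made up for illustration.

```python
from collections import Counter, defaultdict


def train_bigram(text):
    """Count, for each word, which words follow it in the training text."""
    words = text.lower().split()
    following = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        following[current][nxt] += 1
    return following


def predict_next(model, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    followers = model.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


def generate(model, start, max_words=5):
    """Greedily extend `start` one predicted word at a time."""
    output = [start.lower()]
    for _ in range(max_words):
        nxt = predict_next(model, output[-1])
        if nxt is None:
            break
        output.append(nxt)
    return " ".join(output)


# Toy corpus (an assumption for this example, not real training data).
corpus = "the cat sat on the mat . the cat ate the fish ."
model = train_bigram(corpus)
print(generate(model, "on", max_words=2))
```

A real GPT model replaces the frequency table with a neural network that scores every possible next token given the whole preceding context, but the outer loop of predicting, appending, and repeating is the same idea.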

The infographic above provides a quick reference for the key advantages of GPT-based text generation: its versatility for various content types, significant reduction in content creation time, adaptability to different writing styles, and accessibility through APIs. These core strengths drive the widespread adoption of this technology across numerous industries.

GPT models support zero-shot and few-shot learning, which lets them generate different content types (articles, scripts, poetry, etc.) from instructions alone or from just a handful of examples in the prompt. Parameter-efficient fine-tuning (PEFT) methods such as LoRA enable customization for specific needs while keeping computational costs down. Careful prompt engineering further improves the quality and relevance of generated content.

When and Why to Use GPT-Based Text Generation:

This approach is ideal for streamlining content creation workflows, generating first drafts, overcoming writer's block, and exploring different creative avenues. Businesses can leverage GPT-based tools to automate the creation of marketing copy, product descriptions, social media posts, and more. Editorial teams can use it for drafting articles, summarizing long-form content, and even generating creative writing pieces. E-commerce teams can automate product description generation, saving valuable time and resources.

Examples of Successful Implementation:

- OpenAI's ChatGPT: Widely used for drafting marketing copy, blog posts, and other written content.

- Jasper.ai: Leverages GPT for SEO-focused content creation.

- Copy.ai: Offers a suite of GPT-powered writing tools for various content needs.

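- Anthropic's Claude: A transformer-based assistant (not an OpenAI GPT model, but built on the same family of techniques) often used for long-form content creation.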

- GitHub Copilot: Uses GPT for code generation, assisting developers in writing software.

Pros:

- Highly versatile for numerous content types.

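- Can reduce content creation time substantially; the figure of up to 80% is a best case and depends on how much human review and editing the content needs.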

- Adapts to various tones, styles, and formats.

- Relatively accessible via APIs.

- Continuously improving with each new generation of models.

Cons:

- May produce factual inaccuracies or "hallucinations."

- Can reflect biases present in the training data.

- High-quality models can have significant operational costs.

- Potential for generating generic content without domain expertise.

- Ethical concerns regarding originality and transparency.

Tips for Effective Usage:

- Use detailed and specific prompts to guide the model towards desired outputs.

- Always implement human review for factual accuracy and quality control.

- Combine GPT-generated content with domain expertise for specialized topics.

- Utilize system prompts to maintain a consistent voice and style across generated content.

- Consider fine-tuning models on company-specific data for improved alignment with brand messaging.
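The tips on system prompts and example-driven prompting can be sketched as a small helper that assembles a chat-style request. The role names ("system", "user", "assistant") follow a convention used by several chat APIs, but treat that as an assumption and check your provider's documentation. The function name, brand voice, and example texts are hypothetical, and no API call is made here.

```python
def build_messages(system_prompt, examples, user_request):
    """Assemble a chat-style message list.

    system_prompt: instructions that fix voice and style for every request.
    examples: (prompt, ideal_answer) pairs used as few-shot demonstrations.
    user_request: the new task the model should complete.
    """
    messages = [{"role": "system", "content": system_prompt}]
    for prompt, ideal_answer in examples:
        messages.append({"role": "user", "content": prompt})
        messages.append({"role": "assistant", "content": ideal_answer})
    messages.append({"role": "user", "content": user_request})
    return messages


# Hypothetical brand voice and examples, for illustration only.
messages = build_messages(
    system_prompt="You write concise, friendly product copy in British English.",
    examples=[
        ("Describe: steel water bottle, 750 ml",
         "Keeps drinks cold all day. 750 ml of leak-proof steel."),
    ],
    user_request="Describe: canvas tote bag, 15 l",
)
for message in messages:
    print(message["role"], "->", message["content"])
```

Keeping the system prompt and examples in one reusable function makes it easier to hold voice and format constant across many requests, and the output still needs human review before publication.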

Popularized By:

Organizations and individuals such as OpenAI (creators of the GPT models), Microsoft (integration into Office products), Andrew Ng (AI education and advocacy), Andrej Karpathy (former Tesla AI director), and Sam Altman (OpenAI CEO) have contributed significantly to the popularization and advancement of GPT-based text generation.

GPT-based text generation deserves its prominent place in the list of AI content creation tools because it offers unparalleled versatility, efficiency, and accessibility. While acknowledging its limitations and ethical considerations, the potential of this technology to transform content creation workflows is undeniable, making it an essential tool for businesses and individuals seeking to stay ahead in today's digital landscape.

2. Diffusion Models for Image Generation

Diffusion models represent a significant advancement in AI for content creation, specifically for generating images. They synthesize images by reversing a diffusion process. Imagine a pristine image slowly dissolving into random noise. During training, a diffusion model learns to undo that corruption one small step at a time; at generation time, it starts from pure noise and applies those denoising steps iteratively, guided by patterns learned from a massive dataset of images, until a new image emerges. Conditioning the denoising on a text description, a sketch, or another image lets the model generate high-quality, photorealistic visuals that match the input. This approach has reshaped AI image creation by combining high output quality with fine-grained control, making it a valuable tool for a wide range of content creation needs.
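The noising and denoising idea can be sketched numerically. The example below assumes the linear noise schedule from the original DDPM formulation and treats an "image" as a short list of pixel values. There is no neural network here: the true noise stands in for what a trained model would predict, so this shows only the arithmetic that a real sampler relies on. All names are illustrative.

```python
import math
import random


def make_alpha_bars(num_steps, beta_start=1e-4, beta_end=0.02):
    """Cumulative products of (1 - beta_t) for a linear noise schedule."""
    alpha_bars = []
    running = 1.0
    for t in range(num_steps):
        beta = beta_start + (beta_end - beta_start) * t / max(num_steps - 1, 1)
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars


def add_noise(x0, alpha_bar, rng):
    """Forward process: blend the clean signal with Gaussian noise."""
    noise = [rng.gauss(0.0, 1.0) for _ in x0]
    keep = math.sqrt(alpha_bar)
    spread = math.sqrt(1.0 - alpha_bar)
    xt = [keep * x + spread * n for x, n in zip(x0, noise)]
    return xt, noise


def estimate_clean(xt, predicted_noise, alpha_bar):
    """Invert the blend, given a noise estimate (a trained network would supply it)."""
    keep = math.sqrt(alpha_bar)
    spread = math.sqrt(1.0 - alpha_bar)
    return [(x - spread * n) / keep for x, n in zip(xt, predicted_noise)]


rng = random.Random(0)
alpha_bars = make_alpha_bars(1000)
pixels = [0.2, 0.8, 0.5, 0.1]            # a tiny stand-in for an image
noisy, true_noise = add_noise(pixels, alpha_bars[500], rng)
recovered = estimate_clean(noisy, true_noise, alpha_bars[500])
print(noisy)
print(recovered)
```

With a perfect noise estimate the clean signal is recovered exactly. In practice the network's estimate is imperfect, which is why samplers take many small denoising steps instead of one large jump.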

This method stands out for its versatility and control. Features include text-to-image generation using natural language prompts, allowing users to describe the desired image in plain English. Image-to-image transformation capabilities enable modification of existing visuals, while style transfer allows for artistic renderings in various aesthetics. Furthermore, inpainting (filling in missing parts of an image) and outpainting (extending an image beyond its original boundaries) empower users with powerful image editing tools. The level of control achievable through prompt engineering and conditioning techniques is unparalleled, allowing for fine-tuning and iterative refinement of outputs.

Diffusion models offer several advantages for content creation teams. They produce high-resolution, photorealistic images suitable for professional use across diverse platforms. The fine-grained control afforded by prompt engineering allows for precise realization of creative visions. From photorealistic product shots for e-commerce to conceptual art for editorial illustrations, a wide spectrum of visual styles and art directions can be generated. The iterative refinement process allows for continuous improvement and adaptation of outputs based on feedback. This technology democratizes professional-quality image creation, making it accessible to a wider range of users without specialized design skills.

However, diffusion models are not without limitations. They are computationally intensive, often requiring specialized hardware like GPUs for optimal performance. Training these models requires substantial datasets of images, which can be a barrier to entry for some. Furthermore, they may reproduce biases present in the training data, leading to skewed or unfair representations. Copyright and ownership of generated content remain complex legal and ethical issues that require careful consideration. Finally, the potential misuse for creating deepfakes or misleading imagery poses a significant societal concern.

Several prominent examples showcase the power and versatility of diffusion models. Midjourney is a popular choice for creating conceptual art and illustrations, while DALL-E 2 by OpenAI caters to commercial design applications. Stable Diffusion, a powerful open-source model, empowers users with greater flexibility and control. Adobe has integrated its own diffusion model, Firefly, into Creative Cloud applications, streamlining the workflow for existing users. Runway ML offers cutting-edge tools for utilizing diffusion models in video and image creation for professional production environments.

To maximize the effectiveness of diffusion models for your content creation needs, consider the following tips: Use detailed prompts with specific artistic references to guide the model towards the desired output. Experiment with different sampling methods to explore variations and discover unique visual styles. Combine diffusion models with traditional image editing tools for post-processing and refinement. Analyze and learn prompt patterns that reliably produce specific styles. Finally, create workflow integrations with your existing design software to streamline the image creation process.

The popularization of diffusion models is largely attributed to the contributions of several key players. Stability AI, the creators of Stable Diffusion, played a crucial role in making this technology accessible to a wider audience. OpenAI, the developers of DALL-E, further propelled the field with their impressive demonstrations of its capabilities. David Holz, the founder of Midjourney, contributed significantly to its adoption within the artistic community. Emad Mostaque, CEO of Stability AI, championed the open-source approach and facilitated broader access. Finally, the Runway ML team's pioneering work in generative video further expanded the horizons of diffusion models in dynamic media creation.

This technology deserves its place on this list due to its transformative impact on AI for content creation. The ability to generate high-quality, diverse, and controllable images from text prompts represents a paradigm shift in how visual content is produced. For publishers, media companies, e-commerce teams, and marketers, diffusion models offer powerful tools to enhance visual storytelling, create compelling marketing materials, and streamline the content creation workflow.

3. Neural Style Transfer

Neural Style Transfer is a powerful AI technique revolutionizing content creation by allowing users to apply the artistic style of one image to the content of another. This process uses convolutional neural networks (CNNs) to separate and recombine the content and style representations of images, creating unique visual experiences. Imagine merging a photograph of your product with the vibrant brushstrokes of Van Gogh or the dreamlike textures of a surrealist painting – Neural Style Transfer makes this possible, opening up a world of creative possibilities for AI for content creation.

This method works by analyzing the input content image and a separate style reference image. The CNN identifies the underlying features and structures of the content image while simultaneously extracting the stylistic elements (color palettes, textures, brushstrokes) from the style image. These extracted representations are then blended together, generating a new image that retains the original content but adopts the artistic flair of the style image. Modern implementations offer real-time processing capabilities and even extend this technique to video content, adding another dimension to visual storytelling.

Several notable features make Neural Style Transfer particularly appealing for content creation: the clean separation of content and style within the neural network allows for precise control over the blending process; users can apply styles from any image source, from renowned artworks to personal photographs; and adjustable parameters allow for fine-tuning the balance between content preservation and stylistic influence. Compared to newer diffusion models, Neural Style Transfer is generally more lightweight and computationally less demanding, making it readily accessible through various applications and platforms.
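The separation of content and style, and the adjustable balance between them, can be illustrated with the Gram-matrix formulation used in the classic style transfer approach. In this sketch the "feature maps" are small hand-written lists standing in for CNN activations, and the weights are arbitrary assumptions; a real implementation would extract features from a pretrained network and minimize this loss by gradient descent.

```python
def gram_matrix(features):
    """features: list of channels, each a flat list of activations.

    Entry (i, j) is the dot product of channel i with channel j, which
    captures which features co-occur (style) while discarding where
    they occur (layout).
    """
    return [[sum(a * b for a, b in zip(ci, cj)) for cj in features]
            for ci in features]


def mean_squared_error(a, b):
    """Mean squared difference between two equally shaped nested lists."""
    flat_a = [v for row in a for v in row]
    flat_b = [v for row in b for v in row]
    return sum((x - y) ** 2 for x, y in zip(flat_a, flat_b)) / len(flat_a)


def total_loss(generated, content, style, content_weight=1.0, style_weight=1000.0):
    """Weighted sum of content loss (raw features) and style loss (Gram matrices)."""
    content_loss = mean_squared_error(generated, content)
    style_loss = mean_squared_error(gram_matrix(generated), gram_matrix(style))
    return content_weight * content_loss + style_weight * style_loss


# Hypothetical 2-channel feature maps with 2 spatial positions each.
content_features = [[1.0, 2.0], [3.0, 4.0]]
style_features = [[2.0, 0.0], [0.0, 2.0]]
print(gram_matrix(content_features))
print(total_loss(content_features, content_features, style_features))
```

Raising `style_weight` relative to `content_weight` pushes the optimizer toward the style image's textures, while lowering it preserves more of the original content. These are the content-style weight parameters that most tools expose as a single strength slider.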

Examples of Successful Implementation:

- Prisma app: Popular mobile app known for its diverse artistic filters based on Neural Style Transfer.

- DeepArt.io: Web-based platform offering high-quality style transfers using famous art styles.

- Adobe Photoshop's Neural Filters: Integrates Neural Style Transfer functionality directly within a professional editing environment.

- Deep Dream Generator: A web tool built on Google's DeepDream technique, a close relative of style transfer that creates psychedelic and abstract imagery by amplifying patterns within images.

- Artisto app: Extends style transfer capabilities to video content, enabling stylized video clips.

Actionable Tips for Using Neural Style Transfer:

- Select style images with clear, distinctive patterns: This leads to more effective and visually striking results.

- Adjust content-style weight parameters: Experimenting with these parameters allows you to fine-tune the balance between content and style.

- Pre-process images to emphasize desired features: Adjusting brightness, contrast, or saturation before applying the style transfer can improve the final output.

- Use multiple style images for more complex effects: Blending multiple styles can create unique and layered artistic interpretations.

- Combine with other editing techniques: Integrate style transfer into a broader workflow for refined and polished content.

When and Why to Use Neural Style Transfer:

Neural Style Transfer is ideal for creating eye-catching visuals that stand out from the crowd. It's particularly useful for:

- Social media graphics: Generate visually appealing content for platforms like Instagram and Facebook.

- Website banners and headers: Create artistic and engaging visuals to enhance website design.

- Marketing materials: Add a unique stylistic touch to brochures, flyers, and other promotional materials.

- Artistic exploration: Experiment with different styles and create unique digital art pieces.

- Video editing and post-production: Stylize video clips for artistic effect or thematic consistency.

Pros:

- Creates unique artistic interpretations automatically.

- Requires only style reference images, not explicit rules.

- Can apply styles from famous artworks or custom sources.

- Relatively lightweight compared to diffusion models.

Cons:

- Less flexible than modern diffusion models.

- Limited control over specific elements of the output.

- Quality highly dependent on selected style images.

- Can produce repetitive patterns or artifacts.

- Less effective for photo-realistic transformations.

Neural Style Transfer deserves its place in any content creator's toolkit for its ability to quickly and effortlessly generate visually compelling content. By leveraging the power of AI, this technique democratizes artistic expression, enabling anyone to transform their visual content with a touch of creative flair.

4. Generative Adversarial Networks (GANs)

Generative Adversarial Networks (GANs) represent a fascinating and powerful approach to AI for content creation. They utilize a unique two-network architecture, consisting of a "generator" and a "discriminator," locked in a continuous learning loop. The generator's role is to create content, such as images, while the discriminator's task is to evaluate the authenticity of that content, essentially trying to distinguish between real and generated outputs. This competitive dynamic, often described as a zero-sum game, pushes both networks to improve. The generator strives to produce increasingly realistic content to fool the discriminator, while the discriminator becomes more adept at identifying the subtle flaws that betray generated content. Through this iterative training process, the generator's capabilities dramatically improve, leading to the production of highly realistic synthetic content. This architecture has been particularly impactful in image generation, pushing the boundaries of what's possible with AI-driven creativity.
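The competitive dynamic can be written down as two loss functions. The sketch below assumes the standard binary cross-entropy formulation with the commonly used non-saturating generator loss. The discriminator outputs are hand-picked probabilities, not the result of training, and the function names are illustrative.

```python
import math


def discriminator_loss(d_real, d_fake):
    """Binary cross-entropy: real samples should score near 1, fakes near 0.

    d_real: discriminator probabilities for real samples.
    d_fake: discriminator probabilities for generated samples.
    """
    real_term = -sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = -sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return real_term + fake_term


def generator_loss(d_fake):
    """Non-saturating loss: the generator wants its fakes scored near 1."""
    return -sum(math.log(p) for p in d_fake) / len(d_fake)


# Early in training: the discriminator easily spots fakes.
print(discriminator_loss(d_real=[0.9, 0.95], d_fake=[0.1, 0.05]))
print(generator_loss(d_fake=[0.1, 0.05]))

# At the idealized equilibrium the discriminator can only guess (0.5 everywhere).
print(discriminator_loss(d_real=[0.5], d_fake=[0.5]))
```

Training alternates between lowering the discriminator loss and lowering the generator loss. The two objectives pull the fake scores in opposite directions, which is the source of both the method's power and its instability.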

GANs deserve a prominent place in any discussion of AI for content creation due to their ability to generate truly novel and high-fidelity content. Key features include their two-network architecture, an unsupervised learning approach (meaning they can learn from unlabeled data), and sophisticated training methods like progressive growing that allow for the generation of high-resolution outputs. GANs also offer style-based generation, enabling control over the aesthetic qualities of the generated content, and conditional generation, where the output is guided by specific input parameters. This allows content creators to target specific needs and generate highly customized assets.

The benefits for content creation teams are numerous. GANs can produce highly realistic imagery, even with limited labeled training data. They offer impressive diversity within a given content domain, preventing repetitive or predictable outputs. And through conditional generation, they allow for a level of control over the creative process, enabling targeted content generation.

However, GANs are not without their challenges. They are notoriously difficult to train and fine-tune, often requiring significant computational resources and expertise. Training instability, including issues like "mode collapse" where the generator gets stuck producing limited variations of the same output, is a common hurdle. While offering substantial creative potential, they are generally less controllable than newer methods like diffusion models, and their effectiveness is often domain-specific. For example, a GAN trained to generate faces might not be effective at generating landscapes.

Examples of GANs in Action:

- NVIDIA's StyleGAN: Known for generating incredibly photorealistic human faces.

- Artbreeder: An online platform leveraging GANs for image creation and modification, empowering users to explore and manipulate visual aesthetics.

- ThisPersonDoesNotExist.com: A website showcasing the power of StyleGAN by generating synthetic human portraits that are often difficult to distinguish from real photographs.

- Pix2Pix: Demonstrates the potential of image-to-image translation, allowing users to transform images from one style to another (e.g., sketches to photorealistic images).

- DeepFashion: A large-scale clothing image dataset commonly used to train and benchmark GAN-based models that generate realistic images of clothing and apparel, useful for e-commerce and fashion design applications.

Tips for Utilizing GANs:

- Start with Established Architectures: Leverage pre-trained models and architectures like StyleGAN to accelerate your development process.

- Progressive Growing: Employ progressive growing techniques for improved image quality and resolution.

- Spectral Normalization: Implement spectral normalization to enhance training stability and mitigate issues like mode collapse.

- Conditional GANs: Explore the capabilities of conditional GANs to exert greater control over the generated content.

- Post-Processing: Consider combining GAN outputs with other image processing techniques for further refinement and enhancement.
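Of the tips above, spectral normalization is the most mechanical, so it is worth a small sketch. The idea is to divide a layer's weight matrix by its largest singular value, which is usually estimated with power iteration. This version uses plain Python lists and a fixed starting vector for reproducibility, and it assumes a nonzero weight matrix; framework implementations typically use a random start and carry the estimate across training steps.

```python
import math


def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(a * b for a, b in zip(row, vector)) for row in matrix]


def transpose(matrix):
    return [list(column) for column in zip(*matrix)]


def normalize(vector):
    """Scale a vector to unit length (assumes it is not all zeros)."""
    length = math.sqrt(sum(x * x for x in vector))
    return [x / length for x in vector]


def spectral_norm(weight, iterations=50):
    """Estimate the largest singular value of `weight` by power iteration."""
    weight_t = transpose(weight)
    u = normalize([1.0] * len(weight))
    v = normalize(matvec(weight_t, u))
    for _ in range(iterations):
        u = normalize(matvec(weight, v))
        v = normalize(matvec(weight_t, u))
    # sigma is approximately u^T W v once u and v have converged.
    return sum(a * b for a, b in zip(u, matvec(weight, v)))


def spectrally_normalize(weight, iterations=50):
    """Rescale the matrix so its largest singular value is about 1."""
    sigma = spectral_norm(weight, iterations)
    return [[w / sigma for w in row] for row in weight]


weight = [[3.0, 0.0], [0.0, 1.0]]
print(spectral_norm(weight))
print(spectrally_normalize(weight))
```

Capping the largest singular value limits how sharply the discriminator's output can change with its input, which is why the technique tends to stabilize training.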

Key Figures in GAN Development:

The field of GANs has been shaped by researchers including Ian Goodfellow (the original GAN inventor), Tero Karras (StyleGAN researcher at NVIDIA), Jun-Yan Zhu (CycleGAN and Pix2Pix developer), and Phillip Isola (Pix2Pix co-creator), along with the wider NVIDIA Research group. Their work has laid the foundation for the continued advancement of GANs and their application in diverse fields. For publishers, media companies, e-commerce teams, and digital marketing teams looking to leverage the power of AI for content creation, GANs offer a compelling pathway to generate innovative, high-quality visuals that can capture attention and enhance engagement.

5. Automated Video Generation

Revolutionizing the landscape of content creation, AI-powered video generation is rapidly changing how we produce video content. This innovative technology uses artificial intelligence to transform various inputs, such as text, images, and even other videos, into dynamic and engaging video outputs. This makes it a powerful tool within the broader context of AI for content creation.

Leveraging a sophisticated blend of diffusion models, frame interpolation, and motion prediction algorithms, these AI systems can create animations, simulate realistic camera movements, and even generate convincing human movements. From initial concept to the final polished product, automated video generation significantly minimizes the need for extensive human intervention, opening up exciting new possibilities for content creators.
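Frame interpolation is the easiest of these components to illustrate. The sketch below is the naive version, a linear cross-fade between two keyframes represented as nested lists of pixel intensities. Production systems estimate motion instead of blending pixels in place, so treat this as a baseline that shows the idea, with hypothetical names and data.

```python
def interpolate_frames(frame_a, frame_b, num_between):
    """Return `num_between` frames blended linearly between two keyframes.

    Each frame is a list of rows, each row a list of pixel intensities.
    """
    frames = []
    for i in range(1, num_between + 1):
        t = i / (num_between + 1)   # fraction of the way from frame_a to frame_b
        blended = [
            [(1 - t) * a + t * b for a, b in zip(row_a, row_b)]
            for row_a, row_b in zip(frame_a, frame_b)
        ]
        frames.append(blended)
    return frames


# Two tiny 1x2 "frames": a dark frame fading toward a brighter one.
start = [[0.0, 0.0]]
end = [[1.0, 2.0]]
for frame in interpolate_frames(start, end, num_between=3):
    print(frame)
```

Cross-fading works for slow changes in brightness but produces ghosting when objects move, which is the gap that motion prediction and learned interpolation models aim to close.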

Here's a breakdown of what makes automated video generation so compelling:

Features:

- Text-to-video generation from descriptive prompts: Simply describe your desired video scene in text, and the AI will generate it for you.

- Image-to-video animation and motion generation: Bring static images to life by animating them and adding realistic motion.

- Video editing and manipulation capabilities: AI can assist with various editing tasks, streamlining the post-production process.

- Character animation and digital human synthesis: Create realistic and expressive digital characters for your videos.

- Scene extension and background generation: Expand existing scenes or create entirely new backgrounds with AI.

Pros:

- Dramatically reduces video production costs and time: Say goodbye to expensive equipment, lengthy shoots, and complex editing processes.

- Enables creation of content that would be impractical to film: Generate complex visual effects, impossible camera angles, and fantastical scenarios with ease.

- Allows rapid iteration on creative concepts: Experiment with different ideas quickly and efficiently.

- Accessible to creators without traditional video skills: No prior video editing experience is required to create compelling content.

- Supports personalization at scale for marketing: Tailor videos to individual customer segments for highly targeted campaigns. For instance, platforms like Sprello enable the creation of ultra-realistic UGC videos using AI influencers, significantly streamlining video production.

Cons:

- Extremely compute-intensive, especially for high resolution: Generating high-quality videos requires significant processing power.

- Still developing with visible quality limitations: While rapidly improving, AI-generated videos can sometimes exhibit noticeable artifacts or inconsistencies.

- Temporal consistency challenges across longer sequences: Maintaining seamless transitions and realistic motion across longer videos can be challenging.

- Physical implausibilities in complex movements: Complex movements and interactions can sometimes appear unnatural.

- Ethical concerns regarding synthetic media and deepfakes: The potential for misuse of this technology necessitates responsible development and usage.

Examples of AI Video Generators:

- Runway Gen-2: A powerful tool for commercial video generation.

- Synthesia: Specializes in AI-generated presenter videos.

- Pika Labs: Focuses on text-to-video creation.

- HeyGen: Ideal for personalized video messaging.

- D-ID: Creates talking head animations from static images.

Tips for Using AI Video Generation:

- Break complex videos into manageable sequences: This helps improve performance and maintain quality.

- Use keyframes to guide temporal consistency: Ensure smooth transitions and realistic motion.

- Combine AI generation with traditional video editing: Refine and polish your AI-generated videos with traditional editing techniques.

- Start with shorter clips while technology matures: Focus on creating shorter, high-impact videos.

- Implement human review for quality control: Ensure that the final output meets your quality standards.

Key Players in AI Video Generation:

The development of AI video generation has been spearheaded by innovative teams like the Runway ML team (pioneers in AI video generation), Google Research (developers of Imagen Video), Meta AI (behind the Make-A-Video research), and Stability AI (expanding from image to video generation). Visionaries like Cristóbal Valenzuela, co-founder of Runway, are also instrumental in pushing the boundaries of this technology.

Automated video generation holds immense promise for content creators across various industries, from publishers and media companies to e-commerce and marketing teams. By understanding its capabilities, limitations, and best practices, you can leverage this cutting-edge technology to create engaging and impactful video content more efficiently than ever before. This technology truly deserves its spot on any list concerning the power of AI for content creation.

6. Music and Audio Generation

Music and audio generation represents a significant leap forward in AI for content creation. This technology harnesses the power of machine learning models to compose original music, design unique sound effects, and even synthesize realistic speech. By analyzing patterns within vast datasets of existing audio, these AI systems can produce new compositions, vocals, and soundscapes ranging from simple melodies to full orchestral arrangements and nuanced voice synthesis. This offers content creators unparalleled speed and flexibility in their audio production workflow.

This approach works by training algorithms on large amounts of audio data. The AI learns the underlying structures, harmonies, and rhythms present in the data. Then, based on user input such as text prompts or musical parameters, the AI generates new audio content that reflects these learned patterns. For example, you can provide a text prompt like "upbeat jazz music for a coffee shop" and the AI will generate a corresponding piece. More advanced systems allow for detailed control over aspects like tempo, key, instrumentation, and even the intended emotional tone. The ability to create bespoke voiceovers that match your brand is particularly appealing for marketers.
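The "learn patterns, then generate" loop can be shown with a far simpler model than the neural networks these products use. The sketch below is a first-order Markov chain over note names: it records which note follows which in a few training melodies, then samples a new melody from those transitions. The training melodies and function names are invented for illustration.

```python
import random
from collections import defaultdict


def learn_transitions(melodies):
    """Record, for each note, every note that followed it in the training data."""
    transitions = defaultdict(list)
    for melody in melodies:
        for current, nxt in zip(melody, melody[1:]):
            transitions[current].append(nxt)
    return transitions


def generate_melody(transitions, start, length, seed=0):
    """Sample a melody by repeatedly picking a follower of the last note."""
    rng = random.Random(seed)   # fixed seed so the sketch is repeatable
    melody = [start]
    while len(melody) < length:
        options = transitions.get(melody[-1])
        if not options:
            break               # no known continuation for this note
        melody.append(rng.choice(options))
    return melody


# Hypothetical training melodies in C major.
training = [
    ["C", "E", "G", "E", "C"],
    ["C", "D", "E", "G", "C"],
]
transitions = learn_transitions(training)
new_melody = generate_melody(transitions, start="C", length=8)
print(" ".join(new_melody))
```

Every step in the output was seen somewhere in the training data, yet the overall sequence can be new. Modern systems model much longer context plus timbre and rhythm, but the principle of sampling from learned patterns carries over.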

The benefits of AI music and audio generation are numerous, particularly for publishers, media companies, and e-commerce teams seeking custom, royalty-free music. It facilitates rapid iteration on audio concepts, allowing creators to quickly experiment with different musical ideas and variations on existing compositions. Personalized voice content can be generated at scale, providing unique audio experiences for individual users or targeting specific demographics. This technology drastically reduces audio production costs and timeframes, making high-quality audio content more accessible than ever before.

Several platforms already demonstrate the impressive capabilities of this technology. AIVA offers AI-composed music suitable for media productions, while Soundraw specializes in customizable royalty-free music generation. Mubert focuses on adaptive music for applications, dynamically adjusting the music to suit the user's environment or activity. ElevenLabs is renowned for its natural-sounding voice synthesis, and OpenAI's Jukebox pushes the boundaries of music generation with vocals. These examples showcase the diverse range of applications for AI in audio creation.

However, AI-generated audio isn't without its limitations. The quality can vary significantly between different systems, and while some produce impressive results, others may struggle with complex musical structures or capturing the emotional nuances of human-composed music. Voice synthesis, while increasingly realistic, still raises ethical and rights concerns, particularly regarding impersonation and potential misuse. Copyright questions surrounding the training data and the generated outputs also remain a complex issue.

To effectively leverage AI music and audio generation in your content creation workflow, consider these tips:

- Use style conditioning with reference tracks: Providing the AI with reference tracks allows you to guide its stylistic choices and achieve a desired sound.

- Combine AI generation with human arrangement: While AI can generate the raw musical material, human intervention in the arrangement and post-production process often elevates the final product.

- Leverage multi-track generation for post-production flexibility: Multi-track outputs give you greater control over individual instruments and vocals during mixing and mastering.

- Specify detailed musical parameters (tempo, key, instrumentation): Clearly defining your musical requirements helps the AI generate more tailored results.

- Implement feedback loops for iterative refinement: Experimenting with different parameters and providing feedback to the AI helps you progressively refine the output towards your desired outcome.

Music and audio generation deserves its place on this list because it democratizes access to high-quality audio content. It empowers content creators, regardless of their musical background, to produce professional-grade music and audio for a fraction of the traditional cost and time investment. While challenges remain, the potential of AI to revolutionize the audio landscape is undeniable, making it an essential tool for any modern content creation team.

7. Automated Content Curation and Personalization

In today's digital landscape, where content overload is the norm, delivering the right content to the right user at the right time is paramount. This is where AI-powered automated content curation and personalization comes into play, making it a crucial element in any modern content strategy and deserving of its place on this list of AI for content creation techniques. This technology empowers publishers, media companies, e-commerce platforms, and digital marketing teams to tailor content experiences, driving engagement and maximizing the value of their content libraries.

Automated content curation and personalization leverages AI to analyze vast repositories of existing content and individual user preferences to automatically select, arrange, and deliver personalized content experiences. Think of it as a highly sophisticated recommendation engine working behind the scenes. These systems employ a combination of powerful technologies, including recommendation algorithms, natural language processing (NLP), and behavior prediction, to ensure that each user receives the most relevant content, minimizing the need for manual curation.

How it Works: AI algorithms analyze user data, including past behavior, browsing history, demographics, and expressed preferences, to create a unique profile for each individual. This profile is then used to predict what content the user is most likely to engage with. The system automatically categorizes and tags content, generating personalized content sequences across various channels, ensuring a consistent and engaging experience.

Features and Benefits:

- Dynamic Content Recommendation: AI analyzes user behavior in real-time to dynamically recommend relevant content, increasing the likelihood of engagement.

- Automated Content Categorization and Tagging: AI automates the tedious process of tagging and categorizing content, enabling efficient content management and retrieval.

- Personalized Content Sequence Generation: AI creates personalized content journeys, ensuring that users are presented with a logical and engaging flow of information.

- Cross-Channel Content Orchestration: Deliver a consistent personalized experience across multiple platforms, from websites and apps to email and social media.

- A/B Testing Frameworks for Recommendation Optimization: Continuously refine recommendation strategies by testing different algorithms and parameters to maximize performance.

Examples of Successful Implementation:

- Netflix: Uses a sophisticated recommendation system to suggest movies and TV shows based on user viewing history.

- Spotify: Creates personalized playlists like Discover Weekly based on listening habits.

- Pinterest: Tailors content feeds to individual interests and preferences.

- Adobe Experience Platform: Offers powerful content orchestration capabilities for personalized marketing campaigns.

- Feedly AI: Provides personalized news aggregation based on user-defined topics and sources.

Pros:

- Personalized Experiences at Scale: Deliver unique experiences to millions of users simultaneously.

- Maximized Content Engagement and Consumption: Increase content consumption by presenting users with relevant and engaging material.

- Discovery of Hidden Patterns: Uncover user preferences and trends that would be difficult to identify through manual analysis.

- Continuous Improvement: AI algorithms learn and adapt based on user feedback, constantly improving recommendation accuracy.

- Surface Underutilized Content: Bring valuable content buried deep within large libraries to the forefront.

Cons:

- Filter Bubbles: Over-personalization can create filter bubbles, limiting exposure to diverse perspectives.

- Privacy Concerns: Reliance on user data raises privacy concerns, requiring careful data handling and transparency.

- Explainability Challenges: Complex AI models can be difficult to interpret, making it challenging to understand recommendation decisions.

- Potential for Bias: Algorithms optimized for engagement may favor attention-grabbing content over quality or value, skewing recommendations.

- Integration Challenges: Integrating AI-powered curation systems with existing content management systems can be complex.

Actionable Tips for Implementation:

- Balance Algorithmic Recommendations with Editorial Oversight: Combine the power of AI with human expertise to ensure content quality and diversity.

- Implement Explicit Feedback Mechanisms: Encourage users to provide explicit feedback on recommendations to improve accuracy and personalization.

- Use Multivariate Testing: Optimize recommendation strategies by testing different algorithms and parameters.

- Combine Collaborative and Content-Based Filtering: Leverage both approaches to provide more robust and accurate recommendations.

- Introduce Controlled Randomness: Inject a degree of randomness into recommendations to avoid stagnation and introduce users to new content.
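The last two tips can be sketched together in Python. This example assumes you already have per-item scores from a collaborative model and a content-based model (both hypothetical here), blends them with a simple weighted average, and then swaps in lower-ranked items with a small probability (an epsilon-greedy style of exploration). The weight and epsilon values are illustrative, not recommended settings.

```python
import random

def hybrid_scores(collab, content, weight=0.5):
    """Blend collaborative and content-based scores with a weighted average."""
    items = set(collab) | set(content)
    return {
        item: weight * collab.get(item, 0.0) + (1 - weight) * content.get(item, 0.0)
        for item in items
    }

def recommend_with_exploration(scores, k, epsilon=0.1, rng=None):
    """Return k items; each slot is swapped for a lower-ranked item with probability epsilon."""
    rng = rng or random.Random()
    ranked = sorted(scores, key=lambda item: (-scores[item], item))
    picks = ranked[:k]
    rest = ranked[k:]
    for slot in range(len(picks)):
        if rest and rng.random() < epsilon:
            swap = rng.randrange(len(rest))
            # Exchange the pick with a lower-ranked item; no duplicates result.
            picks[slot], rest[swap] = rest[swap], picks[slot]
    return picks

# Hypothetical model outputs (illustration only).
collab = {"a": 1.0, "b": 0.2}
content = {"b": 0.8, "c": 0.4}
scores = hybrid_scores(collab, content, weight=0.5)
print(recommend_with_exploration(scores, k=2, epsilon=0.1))
```

Setting epsilon to 0 returns the plain top-k ranking, so the amount of randomness can be tuned or switched off per channel.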

By weighing these pros, cons, and tips, content creators can use AI-driven curation and personalization to deliver engaging, individually tailored experiences to their target audiences and stand out in an increasingly competitive digital content landscape.

7 AI Content Creation Strategies Compared

Embrace the AI Revolution: Elevate Your Content Strategy

From GPT-based text generation to automated video production and personalized content curation, the tools discussed in this article demonstrate the transformative power of AI for content creation. We've explored how AI can not only streamline content workflows but also unlock new levels of creativity and audience engagement. Key takeaways include the importance of understanding different AI techniques, such as diffusion models, GANs, and neural style transfer, and how they can be applied to generate everything from compelling images and videos to unique musical scores. Mastering these AI-driven approaches empowers content creators across industries – from publishers and media companies to e-commerce and digital marketing teams – to optimize their content strategy for maximum impact. By embracing AI, businesses can enhance efficiency, personalize the user experience, and ultimately achieve greater reach and resonance with their target audience. The future of content creation is here, and it's powered by AI.

As AI continues to reshape the content landscape, staying ahead of the curve is crucial. Ready to experience the power of AI-driven video production? Explore Aeon, a platform leveraging advanced AI technologies to automate video creation for publishers, maximizing efficiency and engagement. Visit Aeon today to discover how you can revolutionize your video content strategy.